Past Radiation Monitoring & Comments

SW Oregon Radiation Monitoring for the Coos Bay & North Bend area

(See also: current/recent monitoring)

See Space Weather for current solar/storm news. Energy News (check beyond their often lurid headlines) has slacked off in 2016. We can follow the demise and poisonous death throes of the nuclear industry via Ring of Fire, Common Dreams, Truthdig and others.

You're at: https://57296.neocities.org/rad-2.htm

Contents

Last worked on: May 16th, 2024

fast find>> Preamble, Air sampling, Air update, Solar flares, Graphs, CPMs & Geiger counters, Food, Graphing, About, Beaches,

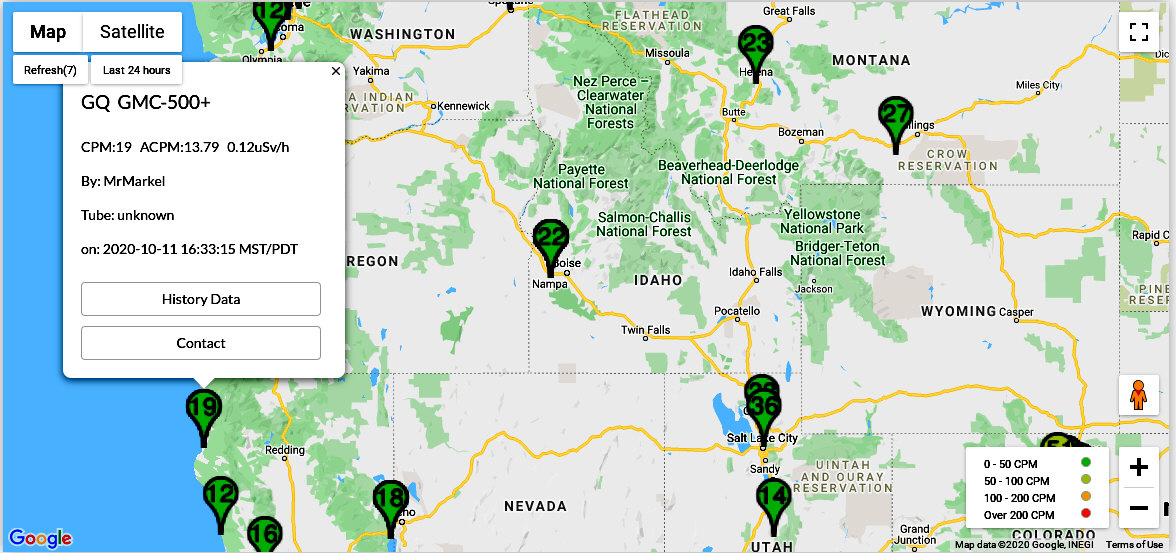

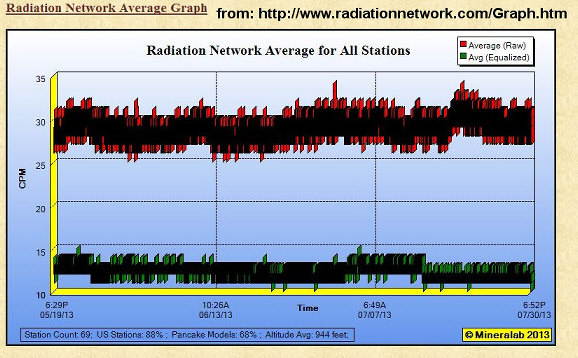

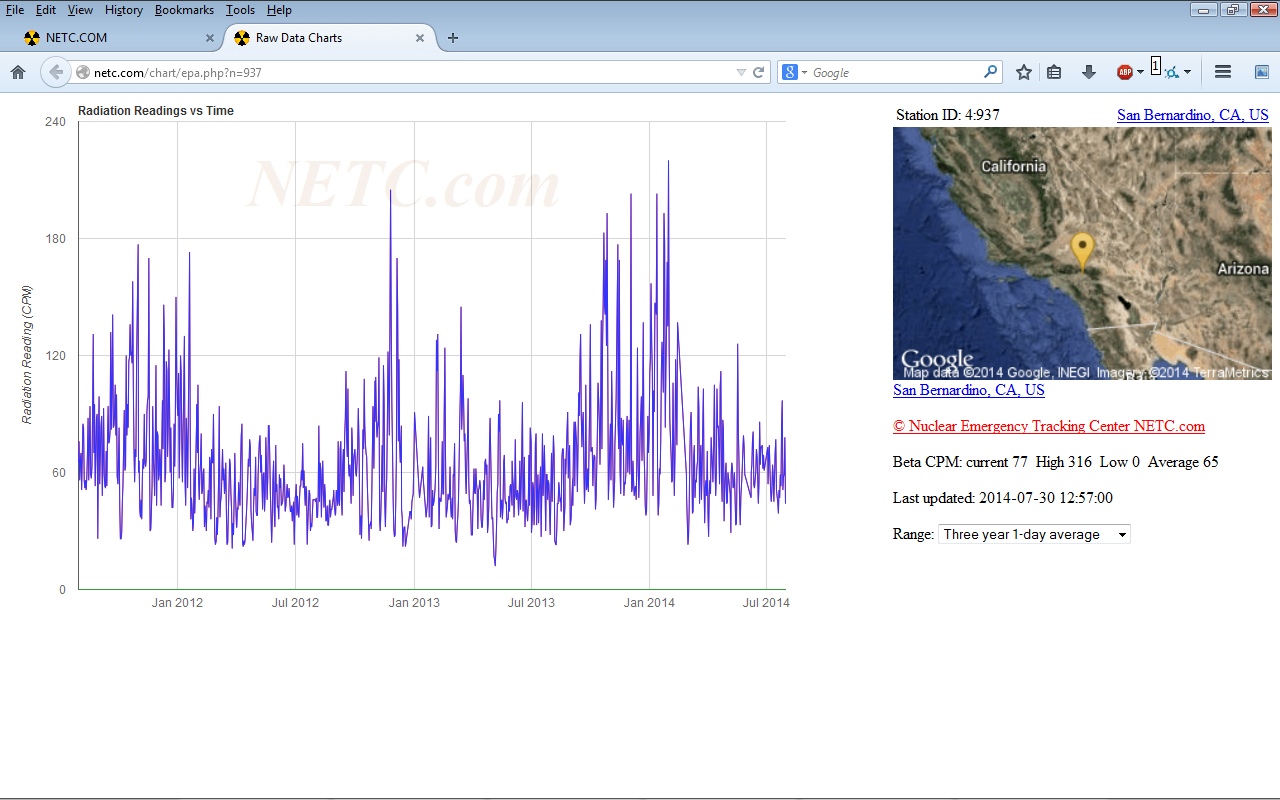

G-M tube failure, Networks, My choice of units, Statistics, Question, Submersion test, A typical day graph, Reminders, Notes & commentaries, Fukushima, Medical technetium-99m, Radium, My graphing pgm, Network suggestions, Draft letter, Fukushima-3

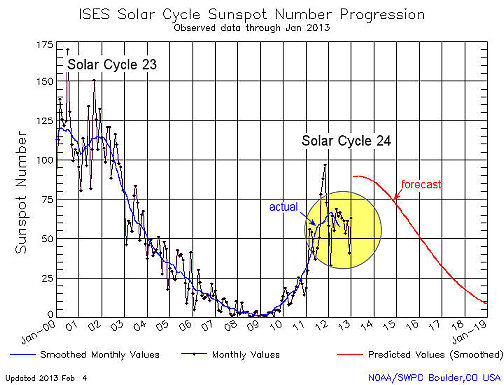

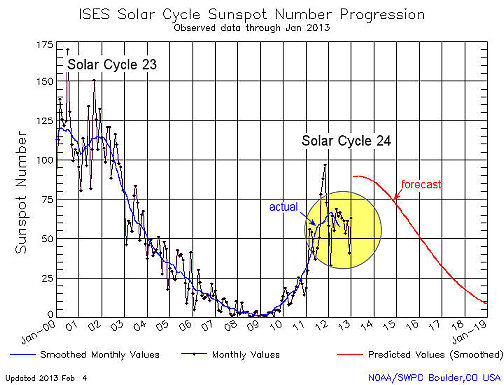

5/16/2024 update: Solar Cycle 25 is more active than

predicted. Here's

a current summary and my earlier comments.

* (7/18/2019): Today's (Brad Friedman) "Bradcast"

(stream it or download the MP3) brings the dismaying news that the Trump

administration (among its many other environmental outrages) is trying

to cut way back on the safety and supervision of our aging nuclear power

plants.

* (6/30/2019): :-(

It's turned out that the SBO (station blackout) nature of the Fukushima

disaster left both the mainstream and alternative media that I follow with

no lasting impression. In the news this past week have been unveiled international

threats --deliberately leaked stories about Russia and the United States

having planted destructive computer viruses in each other's power grid

system controllers. I saw nothing about the consequences of multiple

nuke

power plant melt downs (hundreds of them!) should those viruses get

activated.

My "Background Radiation"

Graphs

(start out rather humbly.)

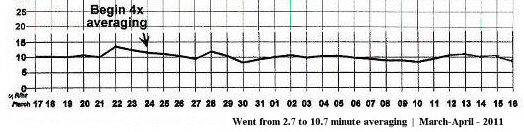

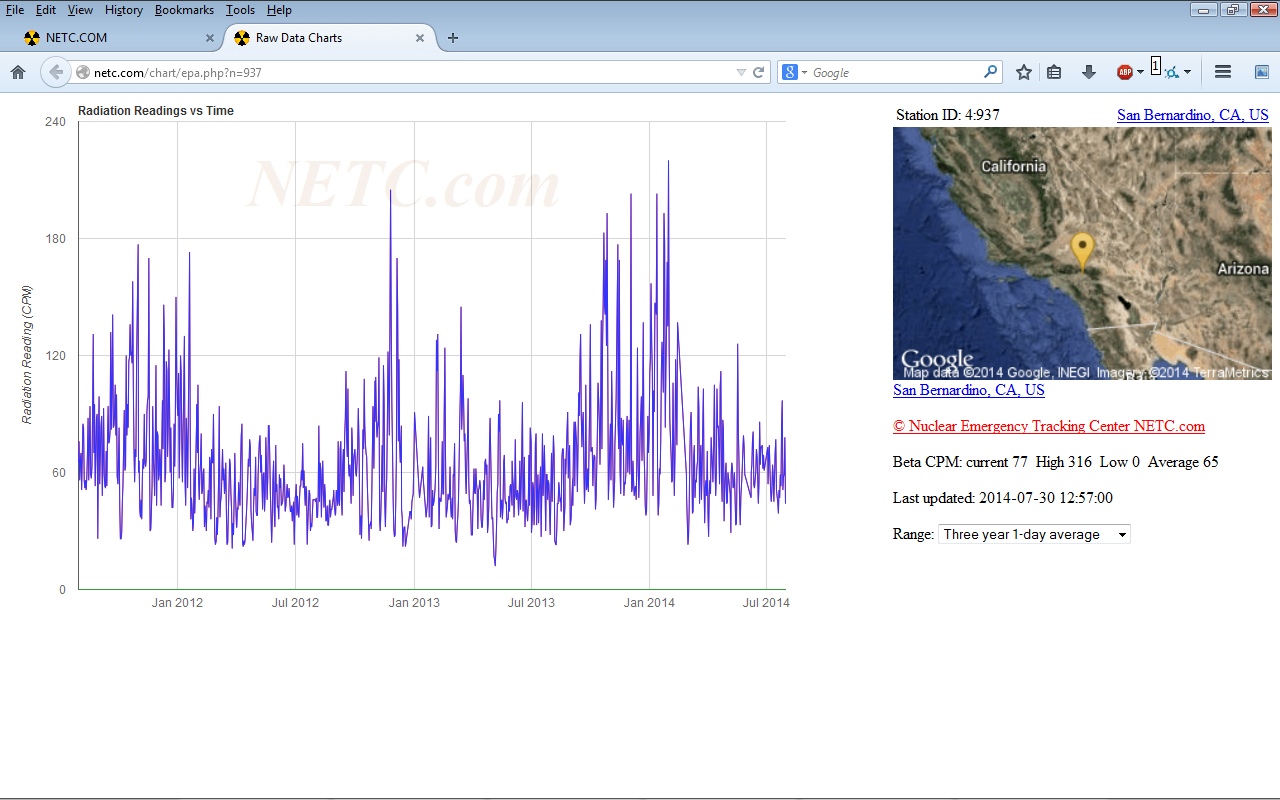

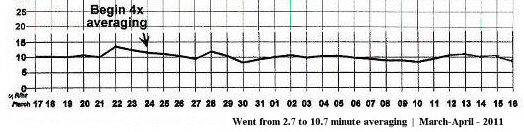

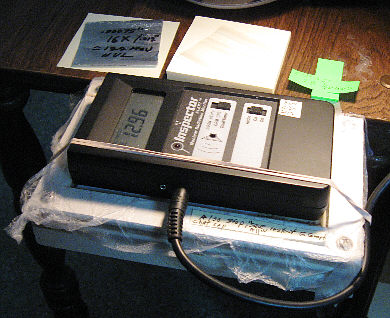

March-April 2011 (10 uR/hr ~ 35cpm with my Medcom Geiger

counter, 15.61cpm with my M4011 device, and 14.82cpm with my SBM-20 G-M

tube device.)

The Fukushima disaster occurred on March 11th.

* These early graphs are based on

averaged uR/hr readings, using a Radex 1503 Geiger counter with an SBM-20

tube. I would repeatedly wait out the 1503's internal 2.7 minute averaging

cycle, note the reading, then average those averages. The 1503 will not

display CPMs.

22nd: That peak is probably no more significant

than the next. I was only taking 2.7 minute counts (+/- 7.2cpm or +/- 2uR),

but went to 10.7 minute averages on the 24th (+/-3.6cpm). (I was using

twice the "standard error", based on the square root of the assumed total

count.)
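
The +/- figures in that note can be reproduced with a few lines of arithmetic. This is only a minimal sketch: it assumes the counts are Poisson distributed (so the standard error of a total count is its square root), that the error quoted is twice that standard error, and that the background runs at about 35cpm in "Inspector" terms (10 uR/hr). The function name is hypothetical.

```python
import math

def rate_uncertainty_cpm(rate_cpm, minutes, sigmas=2.0):
    """Return the +/- uncertainty (in cpm) of an averaged count rate.

    Assumes Poisson counting: the standard error of the total count is
    its square root, so the error on the rate is sqrt(total) / minutes.
    """
    total_counts = rate_cpm * minutes            # assumed total count
    standard_error = math.sqrt(total_counts) / minutes
    return sigmas * standard_error

# Assumed background: 35 cpm (about 10 uR/hr in "Inspector" terms).
for minutes in (2.7, 10.7):
    print(f"{minutes:5.1f} min: +/-{rate_uncertainty_cpm(35, minutes):.1f} cpm")
```

With those assumptions the two averaging periods come out at about 7.2cpm and 3.6cpm, which agrees with the figures quoted above.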

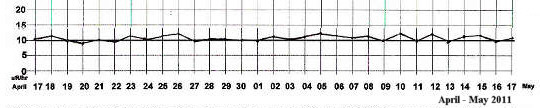

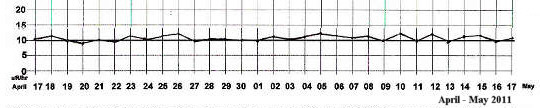

April-May 2011

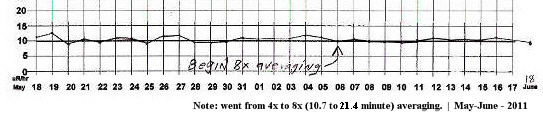

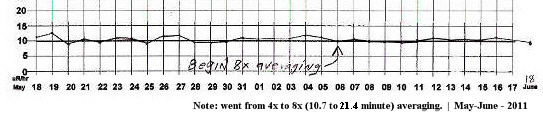

May-June 2011

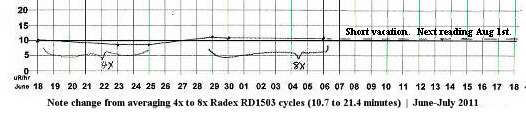

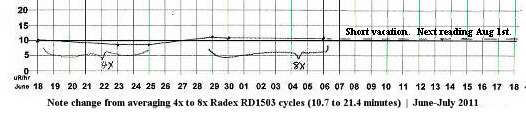

June-July 2011 (uR/hr)

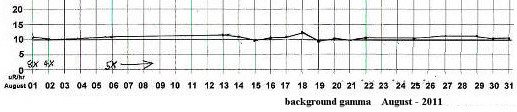

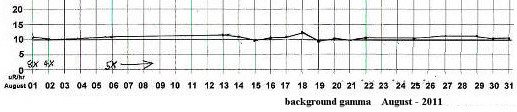

August 2011

(Dropped back to 5x averaging on August 6th. Note that

days pass between readings.)

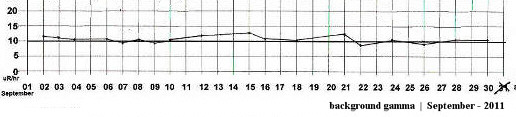

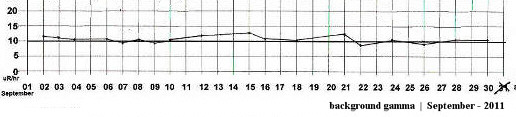

September 2011

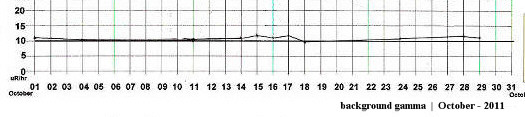

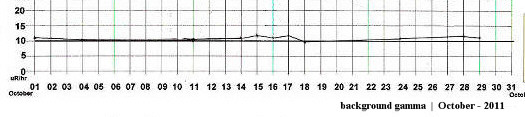

October 2011 (uR/hr)

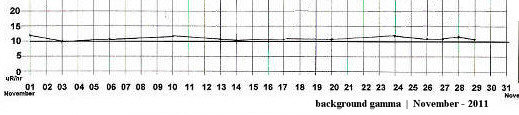

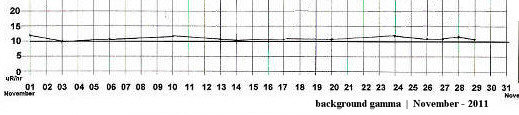

November 2011

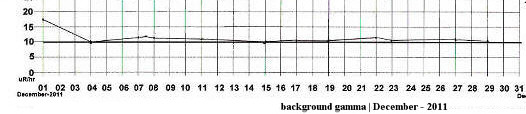

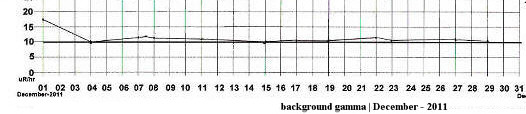

December 2011

(Dropped back to 5x averaging on August 6th. Note that

days pass between readings.)

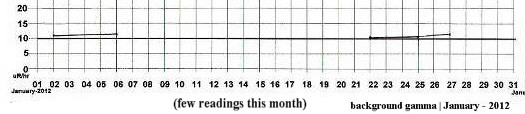

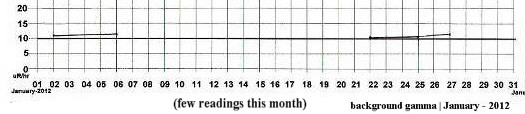

January 2012 (uR/hr)

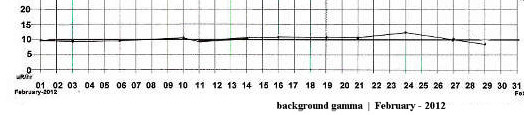

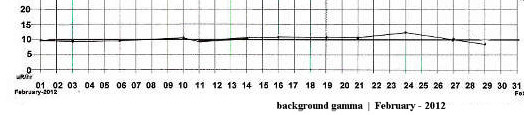

February 2012

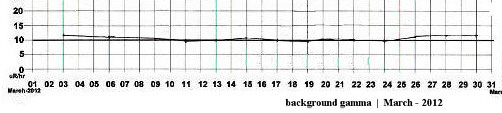

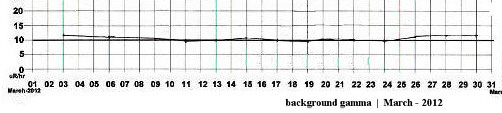

March 2012

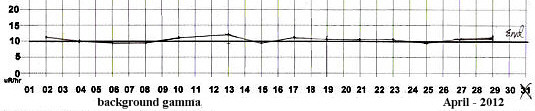

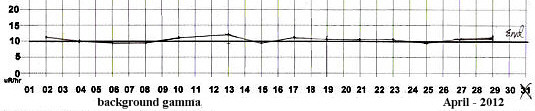

April 2012 (uR/hr)

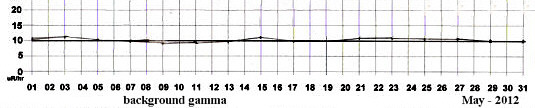

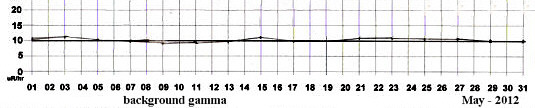

May 2012

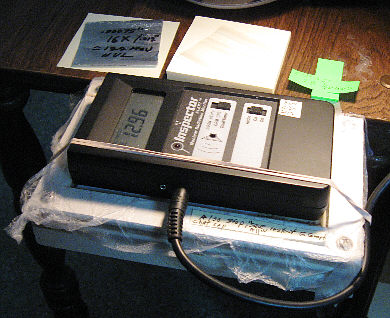

The graphs beyond this point change scale and are

primarily calibrated in (Medcom "Inspector") CPM,

sometimes with supplemental uR/hr equivalents on the

right margin.

(10 uR/hr = about 35cpm with the Medcom Geiger counter)
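
For readers who want to move between the two scales, here is a rough conversion sketch. It assumes the sensitivities quoted on this page for a gamma field: about 35cpm at 10 uR/hr for the "Inspector" and roughly 15cpm at 10 uR/hr for an SBM-20 tube (14.82cpm in the March-April 2011 note). The names are hypothetical and these are not calibration values.

```python
# Approximate tube sensitivities in cpm per uR/hr, taken from the notes
# on this page (gamma field; rough figures, not calibration values).
CPM_PER_UR_HR = {
    "inspector_lnd7317": 35.0 / 10.0,   # about 35 cpm at 10 uR/hr
    "sbm20": 15.0 / 10.0,               # about 15 cpm at 10 uR/hr
}

def ur_hr_to_cpm(ur_per_hr, tube="inspector_lnd7317"):
    """Convert a uR/hr reading to the approximate cpm of the given tube."""
    return ur_per_hr * CPM_PER_UR_HR[tube]

def cpm_to_ur_hr(cpm, tube="inspector_lnd7317"):
    """Convert a cpm reading of the given tube to approximate uR/hr."""
    return cpm / CPM_PER_UR_HR[tube]

print(ur_hr_to_cpm(10))            # "Inspector" cpm at 10 uR/hr
print(ur_hr_to_cpm(10, "sbm20"))   # SBM-20 cpm at 10 uR/hr
```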

June 2012

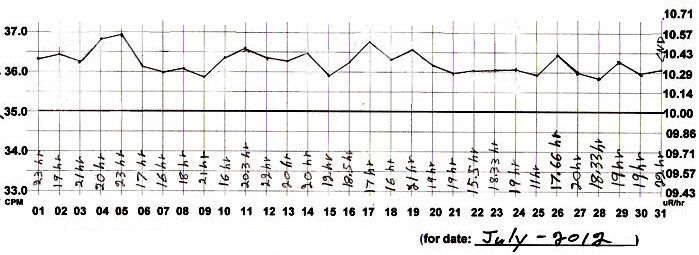

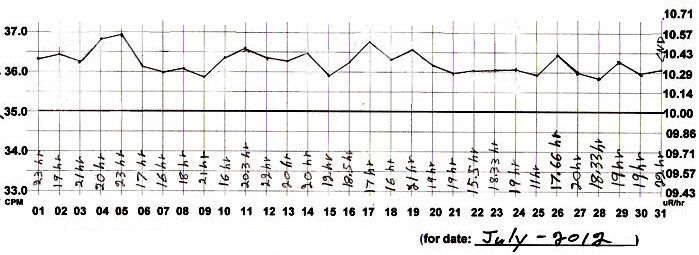

July 2012

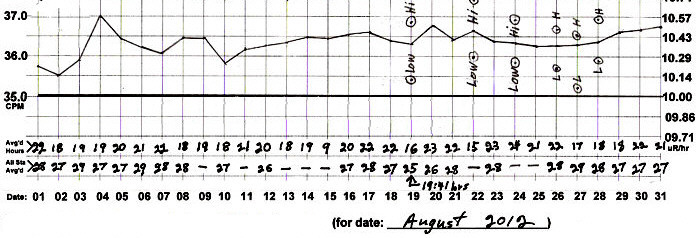

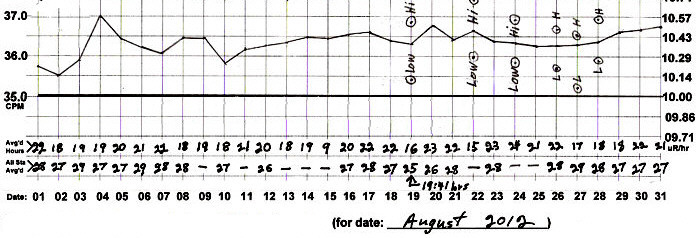

August 2012

September 2012

* That increase in variation after the 11th is due to

reducing the averaging period from hours to 100 minutes. We expect +/-0.6cpm

of jiggle at this level of detection and averaging of "Poisson distributed"

radiation data.

October 2012

November 2012

December 2012

January 2013

23rd: Confirmed high BG

with 2 extra mid-day 100min counts; 26th: +24.5/14.4cpm

mid-day AFs. (Mid-day 10min BGs

of 38 & 41.2cpm.)

February 2013

March 2013

April 2013

May 2013

June 2013

July 2013

August 2013

1st: * See my notes about

elevation tests with a Geiger counter.

26th: * Those large dots are 100 minute

averaged readings made with my "Inspector" Geiger counter (sealed inside

a thin "ZipLoc" plastic bag). Note that the last dot is double, the lower

blue dot being the count with beta radiation blocked. The smaller black

dots are 26.66 minute Radex RD-1503 (SBM-20) averages, taken over a period

of about 30 minutes, expressed in "Inspector" (LND-7317) nominally "equivalent"

(for gamma) CPMs, for which totals, the standard error is +/-2.6cpm.

* It appears that the large black dot, 5-day whoop-de-do

on this graph is all about beta radiation --the stuff I wait for to go

away before testing my air filters for residuals. There was no rain

until the 25th, so the invasive beta (radon daughters, presumably)

might have been driven out of all the sand around here by our sunny warm

days of late. (The 26th was especially warm, but also windy and dry.)

* On the 25th I ran inside and outside tests which included

using my old Radex-RD1503 Geiger counter. Like in the past, I saw

no increase when I took it outside (actually getting a slightly lower reading),

whereas the Inspector, with its mica window, reads about 11% higher in

the same location. This is most likely because the RD1503 is less sensitive

to beta radiation.

* It started raining again

while I was running the outside RD-1503 count, but there was no effect

upon the last 5 readings^. Nor did the rain and steady drizzle of the 25th

seem to affect the "Inspector's" readings.

^ The RD-1503 has neither a long count timer nor a data

port, so I have to take a reading every 3 minutes in order to build up

a longer average --than I can get from its native 160 second cycle. However,

it still seems stable and in calibration, so I'll use it for my outside

counts. (The exercise will do me good :-)) My purpose in this is to

possibly get an earlier warning, should fall-out or volatilized gamma emitters

from the beach begin accumulating. (We're fairly close to the bay and Pacific

ocean.)

* Note that the last big dot is double. The lower blue

one is the Inspector's average with beta blocked. Laying the beta blocked

"Inspector" Geiger counter on the ground gave nearly the same reading:

36.33cpm. Removing the beta shield ran it up to 44.37cpm (off the chart,

but that's mild, compared to some of the beta CPMs we read about elsewhere

after "rain-outs" (or "rain-ups" --not sure)).

* Having reverted to placing my old Radex-RD1503 Geiger

counter out in the yard (34 inches above ground) for a 26.66 minute averaged

count, I'm multiplying the indicated "uR/hr" by 3.5 for a rough "Inspector"

Geiger counter equivalent.

* I deal with our radon CPMs to some extent when I

do air filter readings, recording the initial value and their signature

rate of decay.

28th: * No Radex outside check (small dot) today.

29th: * I ran the outside Radex Geiger counter (SBM-20

tube) check twice, once open, again with 1/8" aluminum shielding: no difference--but

that was anticipated, since past outside versus inside Radex checks showed

very little difference. After a series of bench tests with potassium chloride

and varying aluminum shielding, it appears that the Radex behaves as if

it has about .009" of aluminum shielding (over an imaginary mica window).

The

impression I get is of very weak (under 100KeV) beta at 34 inches off the

ground --which changes my notion of what "half value layer" (HVL) means

with respect to beta. I thought that the intensity of beta electrons was

only "depopulated" to 50% by an HVL (at whatever associated KeV), but that

the energies of the remnant beta stayed the same. (Obviously, gamma behaves

on the depopulation model, since passing light through a 0.3d (50%) neutral

density filter does not change its color, and since useful gamma

spectrometry can be performed on the radiation escaping (say) the large

cadaver of an ocean creature, or the living body of a "nuclear medicine"/imaging

patient.)

September 2013a

1st: * No Radex readings today.

September - 2013b

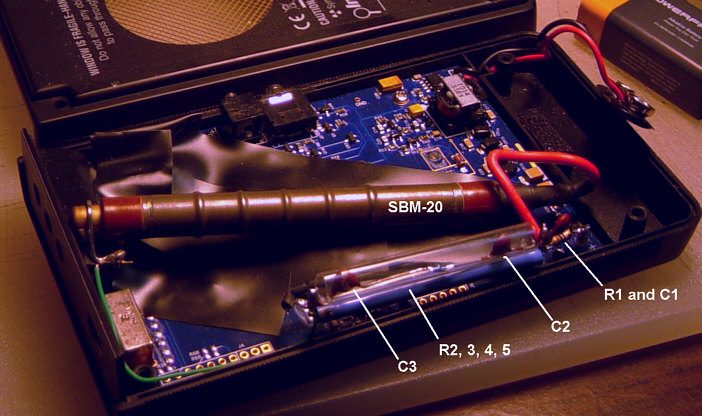

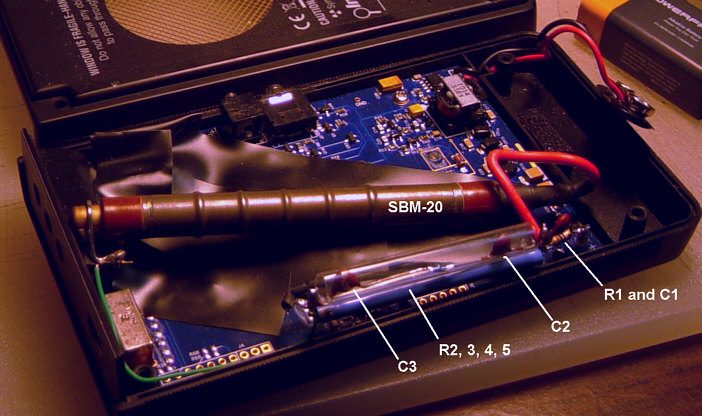

* 8th: Added this 2nd September graph, based on SBM-20

Geiger-Mueller tube sensitivity --after the LND-7317 G-M tube in my Medcom

"Inspector" Geiger counter failed. An SBM-20 runs at 15cpm in a 10uR/hr

gamma field (from Cs-137), while an LND-7317 runs at 35cpm.

* 13th: A stray cat delivered 5 kittens under

our deck, which has been taking up a lot of time --getting them in, taming,

arranging for spay/neuter and adoption --so: my readings are down to little

more than once per day for a while.

* Please note that with the smaller tube and shorter

(cumulative) counting period, I've more than doubled the "noise"/jaggedness

of my graphing. The "standard deviation" is +/-0.9cpm (or let's say: +/-1cpm

--at an average of 15cpm) --and it shows.

October - 2013

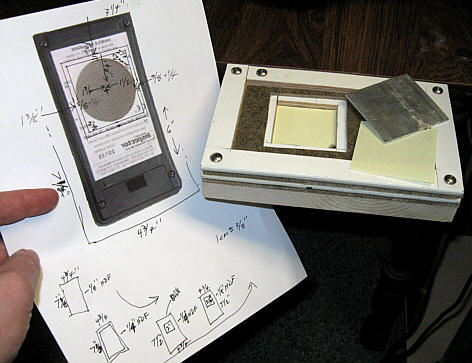

A map of our two field stations, our fixed outdoor Geiger counter and

our portable Geiger counter

*5th:

Was able to install a Russian SBM-20 G-M

tube in my failed Medcom "Inspector" Geiger counter. I've got about 43%

of the Inspector's original gamma sensitivity, plus I've lost the ability

to detect alpha and soft beta radiation --but I can once again do automatically

timed counts plus participate on-line with Radiation Network.

*9th: We selected two remote locations for 30

minute monitoring counts: 4.7 miles in from the mouth of Coos Bay (EBR

= Empire Boat Ramp) and two miles south of the mouth (SB = Sunset

Bay). We'll check these high tide spots throughout the year. (See 2015

entry, just below.)

*27th: Resumed doing periodic air filter monitoring,

but drawing about 4.4 cubic meters of air over 15 minutes --instead of

the old 10 cubic meter standard. The buildups in the filters were weak,

with 50% more CPMs (at about 2x background) in the draw taken 2 inches

off the ground, than in the filter drawn at 12 feet above ground level.

Of necessity, only a 10 minute initial reading, then another 40 minutes

later, were taken. Consequently, the possible errors were large, but the

decays were: to 69% (2 inch high draw) and to 43% (12 foot high draw) over

40 minutes.

*28th: Tested the two air filters of the 27th,

finding their gamma and hard beta radiation to be indistinguishable from

the background level.

*5/25/2015: Have mostly discontinued the remote

30 minute counts in favor of 2.7 minute averaged roving beach wanders (with

our dog Sammy). A shirt pocket Geiger counter (Radex RD1503) is set to

audible, so the ear sensitivity to anomalies adds considerably to the nominal

+/-16% sigma/standard deviation of the display (which is checked periodically).

Have added Bdrf = Bastendorff (just north of Sunset Bay).

**11/2/2020 update: The (Coos River) Chromium

mining/mill having shut down some years ago, I now only do "beach wanders"

at Bastendorff Beach (Sunset Bay being redundant). Instead of a long

count with a tripod mounted Geiger counter, these are 10 minute walks along

recent high tide "froth lines", listening for anomalous clicking indications

of any hot particles ("fuel fleas"), then recording the averaged CPM of

my gamma sensitive Geiger counter at about 30 inches above the sand. I

graph these counts as a percentage of what's been my base-line normal at

the house --for my SBM-20 tubed Medcom "Inspector" Geiger counter.

November - 2013

*EBR, SB and Home: see note on the October graph.

*28th: Ran an air filter

draw, finding the initial CPMs reading clearly at 4.5x background in the

test jig. The decay rate (to 73% at 20 minutes) had a radon daughter profile.

The filter read at background, 24 hours later.
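
A decay figure such as "to 73% at 20 minutes" can be turned into an effective half-life for comparison between air draws. The sketch below assumes a single exponential decay, which is a simplification: radon daughters decay as a chain, so the real curve is not a pure exponential. The function name is hypothetical.

```python
import math

def effective_half_life(fraction_remaining, elapsed_minutes):
    """Half-life implied by a single-exponential decay to the given fraction."""
    if not 0.0 < fraction_remaining < 1.0:
        raise ValueError("fraction_remaining must be between 0 and 1")
    return elapsed_minutes * math.log(2) / math.log(1.0 / fraction_remaining)

# The air filter of the 28th: down to 73% after 20 minutes.
print(f"{effective_half_life(0.73, 20):.0f} minutes")
```

Under that assumption the filter of the 28th works out to roughly 44 minutes, and a filter that falls to 50% in 40 minutes has, by definition, a 40 minute effective half-life.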

December - 2013

5th: * Began monitoring with the new outdoor station.

11th: * Began 60 minute counts at home (30 minutes

at remote Empire Boat Ramp and Sunset Beach stations).

16th: * Began 24/7 air sampling at low velocity

(1.36 cubic meter/day, instead of sporadic draws of 4.4 cubic meters in

15 minutes).

19th: * With the approach of winter storms and

continuous air sampling, the intake was lowered to 9.6 feet above the ground.

20th: * Have both a beach sand sample and 3 air

filters (1st low velocity and 2 old filters) on their way for gamma spectrometry.

24th: * The new outside monitoring station seems

stable. Will begin running it continuously on-line with Radiation Network.

26th: * Stopped 24/7 air sampling. Filter was

soaked from condensation.

* EBR and SB: see note on the October graph.

January - 2014a

January - 2014b

11th: * I'm not satisfied with any of the graphing

modes I've tried (and see below).

22nd: * Did two 65 minute (19.1 cubic meter)

filtered air draws today, getting unusually high initial radon/daughter

readings (9x and 12x background) which decayed to 51% and 50% in 40 minutes

(gamma and hard beta only). They'll be examined with gamma spectrometry.

26th: * Air filter update.

The 9x filter checked

out as having no residuals. (Thanks, Tom.) We're still waiting for

a report on the 12x filter. (2/22: I never did get a final analysis

on the 12x, but the preliminary graph implied that the lab (not Tom's)

didn't have enough substance on the filter to get readings sufficiently

above background.)

I thank both labs for having a go at my air filters

and I'll be quadrupling my air draws.)

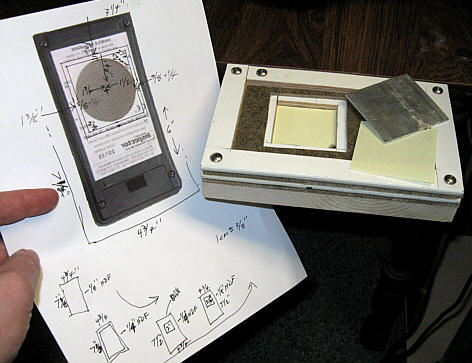

29th: * I'll be going from 1 inch to 2 inch diameter

air filters here. I get about the same volume rate (limited pumping and

plumbing capacity) but the flow rate per square inch will be 25% as much

(better?) and the filter size is a better match to a 2" scintillator crystal.
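
The "25% as much" figure is just the ratio of the two filter face areas, assuming round filters and an unchanged volume rate. A minimal sketch, with hypothetical function names:

```python
import math

def face_area(diameter_inches):
    """Area of a round filter face, in square inches."""
    return math.pi * (diameter_inches / 2.0) ** 2

def relative_flow_per_area(old_diameter, new_diameter):
    """Flow per square inch through the new filter, relative to the old one,
    assuming the same total volume rate through both."""
    return face_area(old_diameter) / face_area(new_diameter)

print(relative_flow_per_area(1.0, 2.0))   # 1 inch -> 2 inch diameter
```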

30th: * There was no evidence of the M6 solar

flare today at 08:17 PST (16:17 UTC/GMT) in my minute-by-minute counts.

However, there was an isolated, 1 minute, greater than 4 sigma of 33cpm

at 07:35 today.

* I'll continue watching for and noting

any unusual short term spikes at my station. Unless grouped, isolated spikes

of less than 4 standard deviations/"sigmas" will be ignored, since 3 sigma

counts occur several times per day --by statistical probability alone.

At a station average of 16cpm, a 4 sigma spike would come in at 32cpm (by

my calculation).
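
The reasoning above can be checked numerically. This sketch assumes one-minute counts follow a Poisson distribution (standard deviation equal to the square root of the mean) and uses the exact Poisson tail rather than a normal approximation. The function names are hypothetical.

```python
import math

def sigma_threshold(mean_cpm, n_sigma=4.0):
    """Count level n_sigma standard deviations above the mean (sd = sqrt(mean))."""
    return mean_cpm + n_sigma * math.sqrt(mean_cpm)

def poisson_tail(mean, k):
    """Probability of k or more counts in one interval, P(X >= k)."""
    term = math.exp(-mean)        # P(X = 0)
    below = 0.0
    for i in range(k):
        below += term             # accumulate P(X = i)
        term *= mean / (i + 1)    # step to P(X = i + 1)
    return 1.0 - below

def expected_per_day(mean_cpm, k, minutes_per_day=1440):
    """Expected number of one-minute counts per day at or above k."""
    return poisson_tail(mean_cpm, k) * minutes_per_day

print(sigma_threshold(16))                  # the 4 sigma level at 16 cpm
print(expected_per_day(16, 28))             # minutes per day at 3 sigma or more
print(expected_per_day(16, 32))             # minutes per day at 4 sigma or more
```

At a 16cpm average the 4 sigma level is 32cpm, as stated. The expected number of 3 sigma minutes should come out at a handful per day, while 4 sigma minutes should come out at well under one per day, so an isolated one now and then is still expected from chance alone.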

February - 2014

* Days during which my minute-by-minute CPMs reached 33cpm

or higher: 2/1 (33), 2/12 (33), 2/13 (33), 2/14

(34), 2/16 (33).

22nd: * We had two dry sunny days in a row, so

I checked the beach stations and did a 40 cubic meter air draw, getting

what's apparently radon/daughters (40 minute half-life) with an initial

reading of 5x background.

23rd: * After 39 hours, the air filter read at

a normal background level. I'm sending this filter to Tom's lab for

gamma spectrometry.

March - 2014

2nd: * Began adding 12 hour daytime through evening

averages as unconnected small black dots. (Might not catch every day's

count.)

13th: * An isolated single minute count of 33

at 21:42 hours.

20th: * An isolated single minute count of 33

at 11:19 hours.

22nd: * Gave up on 24 hour averages (as being

not much different than 12 hours) and went to all 12 hour --and all connected

on the graph.

28th: * Whoops: shut down the computer before

I saved my CPM data --but it was running 15-something, per usual. (My dog's

fault! :-)

April - 2014

13th: * There was an isolated one minute count of

32 at 20:34 hours PDT. Presumably, this was a random high (re: "Poisson

distribution").

14th: * A rather high reading at the Empire Boat

Ramp today, but not at Sunset Beach. EBR was higher in February as well.

* "100%" was (arbitrarily) normalized to the last week

in January --which appears to have been a few percentage points higher

than the annual normal average. I'll stick with it never-the-less.

15th: * There was an isolated one minute count

of 33 at 14:17 hours PDT.

16th: * There was an isolated one minute count

of 34 at 19:49 hours PDT.

20th: * Whoops: forgot to turn the data logging

program on today --sorry.

May - 2014

9th: * There was an isolated 32cpm one minute spike

at 23:45 hours PDT.

12th: * Got another high reading at the Empire

Boat Ramp today --though not a record, and Sunset Bay was fairly high as

well. Both readings were above clearly delineated (by detritus and seaweed)

high tide/wave wash lines.

* Again: I originally expected

Sunset Bay to be higher, since it opens upon the ocean --particularly

as we anticipate the Fukushima plume's arrival. It might be that the Empire

Boat Ramp station (4.7 miles up into the outflow of the Coos River) is

located on dredgings which happen to be more radioactive. Another possibility

is forest run-off, abandoned and current mining operations further up the

river.

* I'm torn as to whether I should always station on

the same maximum tide line spot, or follow the most recent/apparent high

water line as it advances and recedes. If I keep so moving the station,

I might cross a vein of thorium rich sand and get a false impression about

encountering evidence of the Fukushima plume. What's your opinion?

* I also did an air draw today. It was supposed

to be 2 hours (40 cubic meters), but I drifted off to sleep and it ended

up being a 140 minute draw (47 cubic meters). The initial N95 filter activity

was fairly low (4.4x background) and it decayed at a classical radon daughter

rate (to 48% in 40 minutes, counting gamma and beta only). After 49 hours,

the filter checked at background, so there were no residuals that I can

detect.

16th: * There was an isolated 33cpm one minute

spike at 15:06 hours PDT.

18th: * No birds at the feeders this morning --very

unusual, but has happened once before. We normally have 15 to 20 pigeons

and dozens of chirping small birds (sparrows, blackbirds, cow birds, crows).

They must have come later, but not enough of them to scour the bottom of

the elevated small bird feeder.

19th: * Up to 15 pigeons trickled in, but initially,

no small birds again.

22nd: * There was an isolated 32cpm one minute

spike at 09:01 hours PDT. (Will begin noting spikes at 33cpm after this

entry.)

24th: * Sorry! I flat forgot to turn the data

logging on today.

* Back to normal on pigeons, small birds still

somewhat diminished.

June - 2014

05th: * No pigeons, sufficient small birds at the

feeder.

06th: * 6 pigeons, sufficient small birds

at the feeder.

07th: * 12 pigeons, normal number of small birds.

10th: * Pigeon and small bird count back to normal.

(Turns out a family of feral cats moved in.)

* Did an air draw today: 40 cubic meters. The

initial N95 filter activity was quite low (1.7x background). After 24 hours

the filter checked at background, so there were no residuals that I could

detect.

July - 2014

10th: * There was an isolated 34cpm one minute spike

at 12:02 hours PDT.

12th: * Did our monthly 40 cubic meter air draw

--through a small N95 filter. The initial CPM was exceptionally low: 56%

above background, which then decayed exceptionally fast --to 15% in 40

minutes --perhaps because my deviation errors ganged up on me this time.

(I'll try to get this air draw data onto the above graph, which will be

periodically posted to: http://radviews.com.)

27th: * Isolated 34cpm spike at 10:37 hours PDT.

August - 2014

September - 2014

October - 2014

November - 2014

December - 2014

January - 2015

February - 2015

March - 2015

April - 2015

% deviation from 293.2° Kelvin G-M tube temperature

(-5%) from 4/19/2015

May - 2015

5/25b: * I've discontinued my remote

station 30 minute beach and bay counts in favor of "beach wanders"

(with our dog Sammy), while carrying a beeping Radex-RD1503 in my shirt

or jacket pocket (for pretty much of a gamma count only). While the display

count is only averaged over the previous 160 seconds --for a whopping standard

deviation of +/-16% (+/-2.5cpm), the ear is oddly more sensitive to changes

than that would suggest. The advantage is in greatly expanding the area

I'm monitoring, but only for detecting something gross --like "hot particles"

or a hot object, since there'll no longer be a fixed station base line.

However: should I log a significant average increase or an anomaly, I'll

do a better count. I've added station Bastendorff ("Bdrf") --between Sunset and the Empire Boat Ramp.

June - 2015

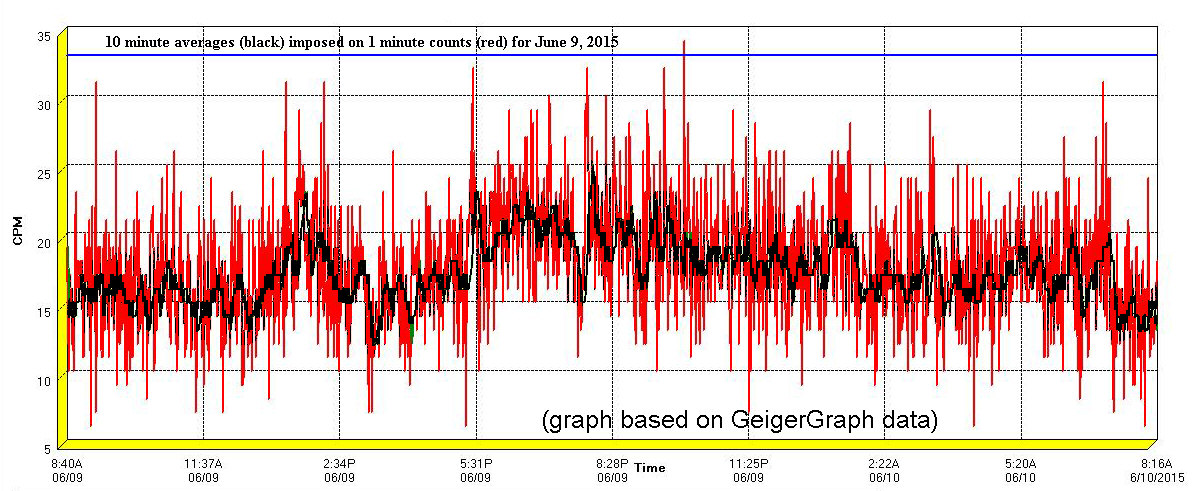

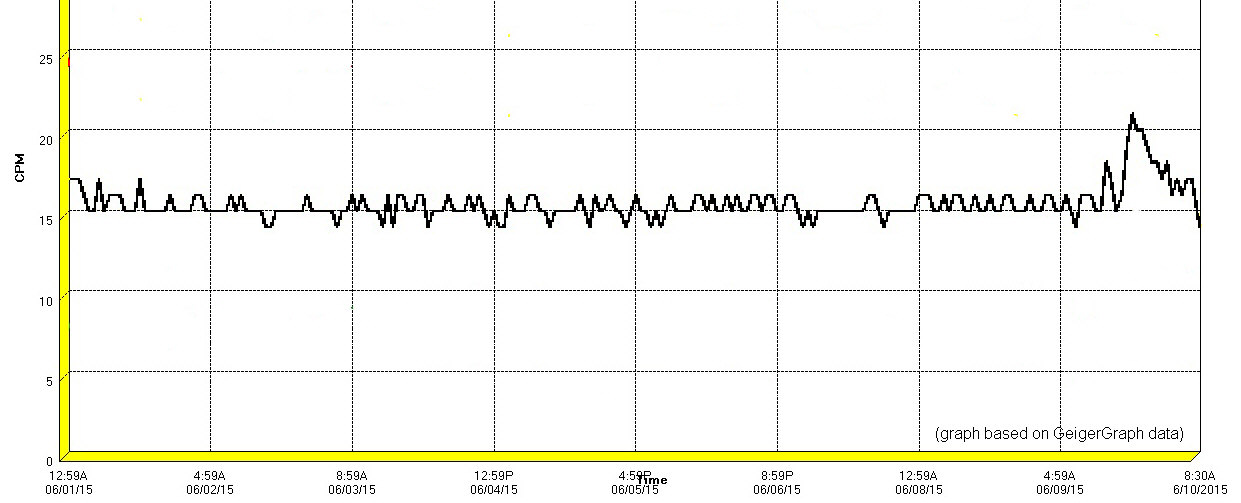

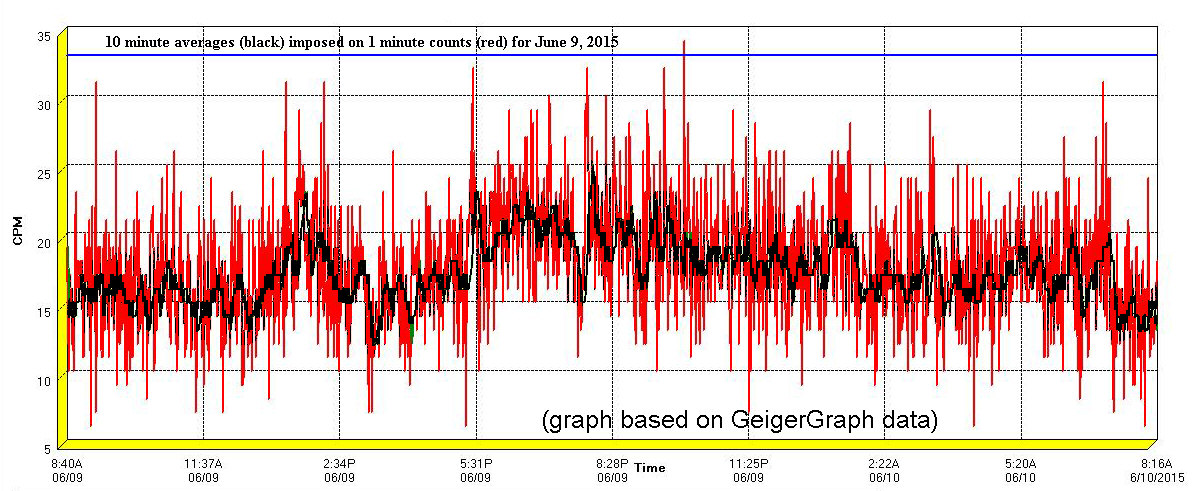

6/9b: * I don't know what these peaks are about,

but that 24 hour average is a whopping 17.7 standard deviations ("sigma")

over the 15.77cpm norm here (at least: the way I figure it). As shown

below, the day's 60 minute averages, 10 minute averages and one minute

counts are no slouches either. (Ctrl+ to enlarge the images.)
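
One way to arrive at a "sigma" figure for a long average is sketched below. It is an assumption, not necessarily the method used for the number above: it treats each minute's count as Poisson distributed at the norm rate, so the standard error of an average over many minutes shrinks with the square root of the number of minutes. The function names are hypothetical.

```python
import math

def standard_error_of_average(norm_cpm, minutes):
    """Standard error (cpm) of an average over `minutes` one-minute counts,
    assuming Poisson counting at the norm rate."""
    return math.sqrt(norm_cpm / minutes)

def sigmas_over_norm(observed_cpm, norm_cpm, minutes):
    """How many standard errors an averaged rate sits above the norm."""
    return (observed_cpm - norm_cpm) / standard_error_of_average(norm_cpm, minutes)

# A 24 hour average against the 15.77 cpm norm:
print(f"{standard_error_of_average(15.77, 24 * 60):.2f} cpm")
```

On that basis the standard error of a 24 hour average is only about 0.1cpm, which is why a rise of a cpm or two in a whole day's average amounts to so many standard deviations.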

One and Ten Minute Averages on June 9th, 2015

(Ctrl+ to enlarge the images.)

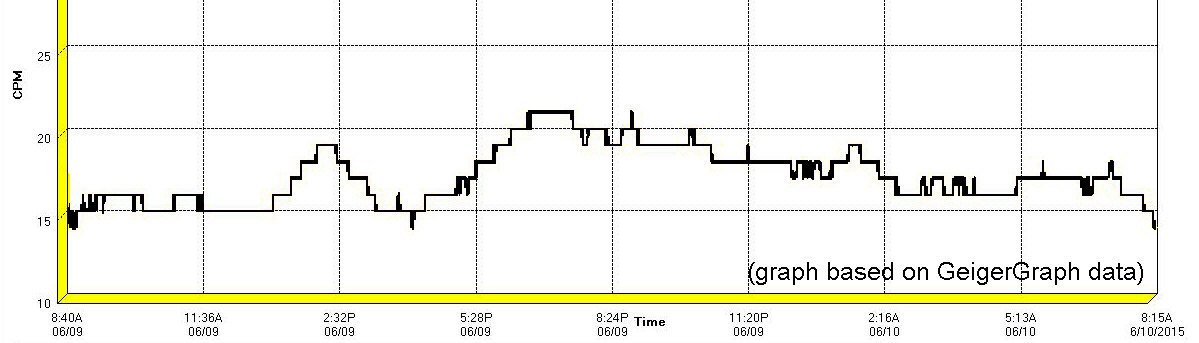

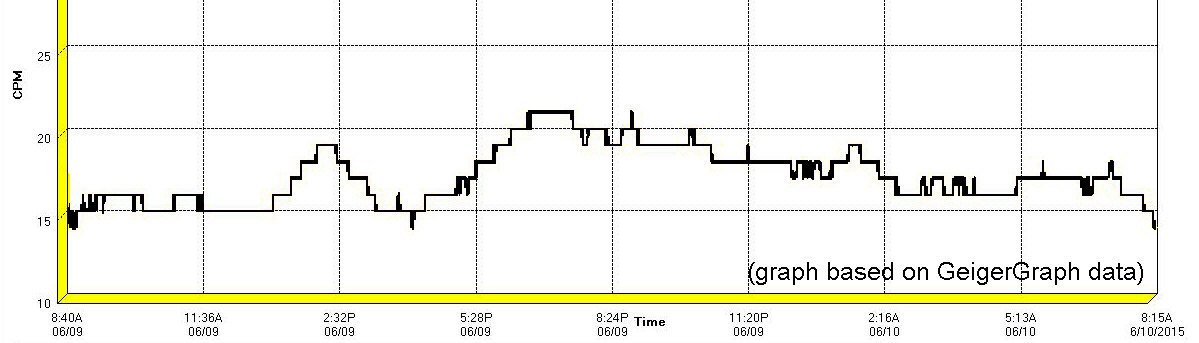

Hour-long averages on June 9th, 2015

(I'm guessing this is a rounded running average.)

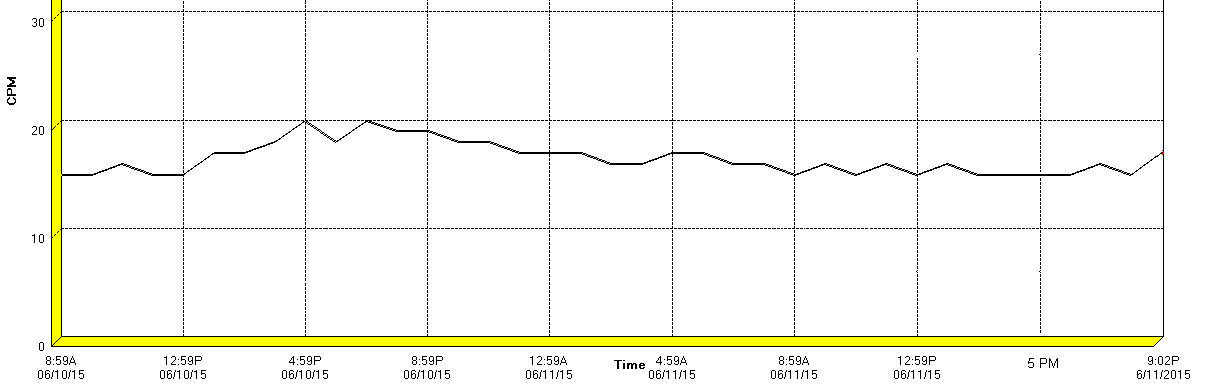

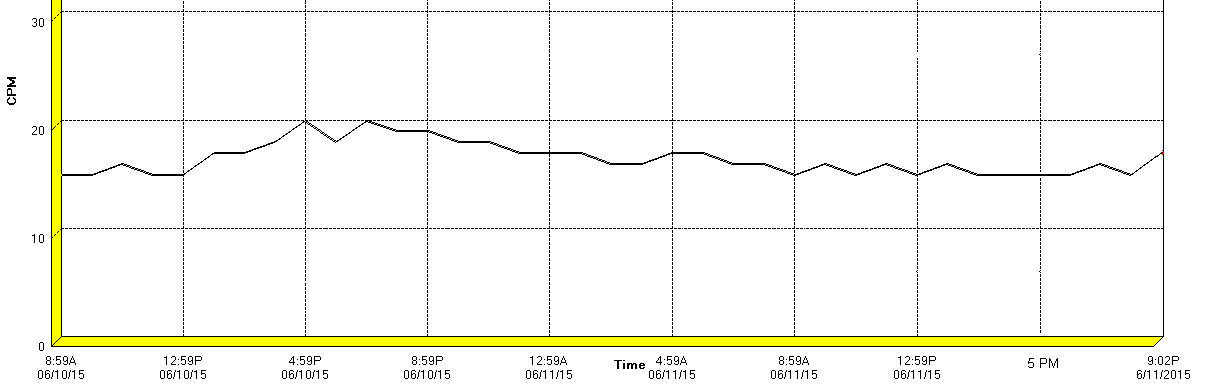

Hour-long averages on June 10th, 2015

(This appears to be an hour-by-hour straight average,

based on a different output of GeigerGraph data.)

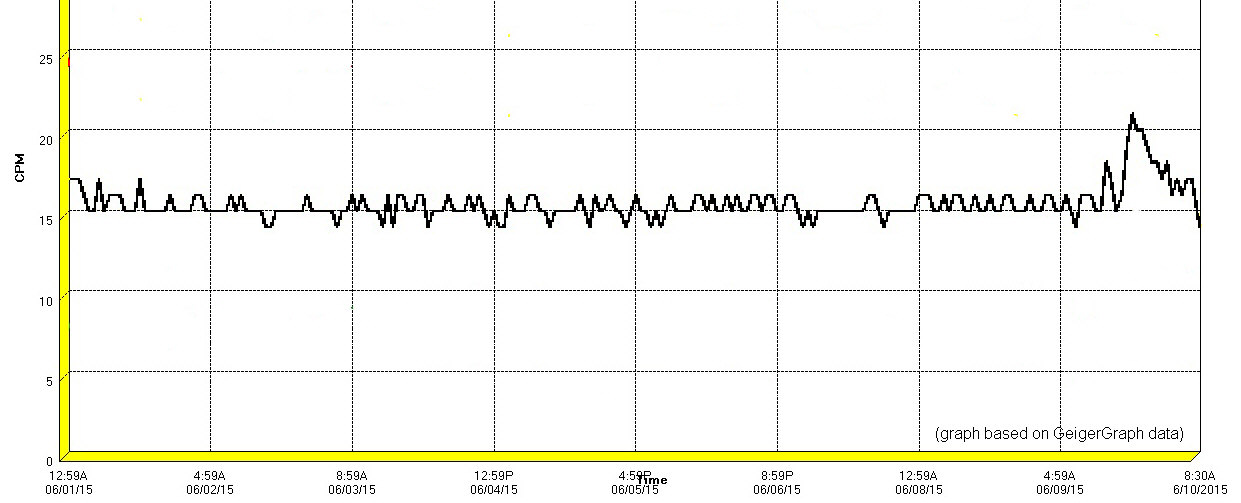

Hour-long Averages, June 1st through 9th, 2015

6/10c: The high count experience here seems to have

been isolated to my station. Judging by the period of hours that it appeared

to last, I speculated that I was picking up radiation from a neighbor who'd

been given a radioactive tracer injection (ie: "nuclear medicine"), although

I have no confirmation of that.

6/11: * Okay --I'm stumped. Assuming this

is about a neighbor who's been given a medical dose of a radioactive tracer

substance, a second day of high CPMs suggests that it can't have been 6

hour half lived technetium-99m (which is rapidly eliminated from the body).

Possibly, the tracer was iodine-131, but for all I know, my elevated CPMs

were caused by something else altogether.

* I compared my regular Geiger counter to one which used

to match its counts fairly well (long term averages), and found it about

5% higher now. Since my graphs have otherwise been looking consistent,

I'm saying that the 2nd Geiger counter was 5% low --for some reason.

* I did a 4 loop "beach wander" today, since there's so

much in the (Ene) news about ocean contamination, but my readings were

normal --and I was listening to the beeps as I walked. (I saw the same

bands of --what I take to be-- small, dead, paper thin jelly fish --as

before.)

6/16: How to log and graph such a rough and casual

reading is a problem. Tentatively, I'll average the displayed range of

readings and "round up" to the nearest 10% --as looked at and informed

by the audible beeps.

Sorry: Save for an active period late in November, I got

discouraged and stopped graphing --until 2016, preserving only my logged

notes for unusual peaks/events (as follows). However, the logging program

I've been using (an old beta version of GeigerGraph-5) can be set to generate

an hourly averaged graph for that period (shifted about 8 hours

on 10/10/2015, when I went to UTC time) with overlap in June for comparison:

--for which you'll have to Ctrl+ to enlarge the images

and read the legends. (PDT time)

That period of interest in June (PDT time).

July - 2015: * There were isolated (ie: no preceding

build-ups nor follow-on decays) one minute long peaks on the 4th (33cpm),

the 8th (34cpm), 25th (33cpm), but the days of July otherwise looked normal.

August - 2015: * There were isolated 33cpm

(minute-long) peaks on August 21st, 23rd, 26th, and 28th. We logged isolated

34cpm peaks on the 22nd and the 26th as well. Those peaks were statistically

unsurprising and the rest of August has been within our normal

range.

(I'll try

to make my reporting more uniform.)

September - 2015: * We logged peaks as follows:

~33cpm at 08:37 hours (all PST)

on 9/7

~33cpm at 20:22 hours on 9/9

~34cpm at 07:20 hours on 9/12

~33cpm at 00:22 hours on 9/16 (The

10 minute running avg hit my reporting threshold of 20cpm at 00:30h)

~The 10 minute running avg hit

my reporting threshold of 20cpm on 9/20 at 12:00 hours

~34cpm at 11:25 hours on 9/22

~34cpm at 12:23 hours on 9/24,

and on the 20th at 12:00 hours.

~37cpm peak on 9/24 at 13:50 hours

--a

bit unusual

Other than those of the 24th, September's peaks were

isolated (ie: no preceding build-ups nor follow-on decays) and statistically

unsurprising. The rest of September was within our normal

range.
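
The two reporting thresholds used in these lists (a one-minute count of 33cpm or more, and a 10 minute running average of 20cpm or more) can be applied to a day's minute-by-minute log with a short scan. This is a sketch only: it assumes a plain list of one-minute counts and a simple trailing average, which may differ from how GeigerGraph computes its running average. The function name is hypothetical.

```python
def flag_events(minute_counts, spike_cpm=33, window=10, avg_threshold=20.0):
    """Scan one-minute counts for spikes and high running averages.

    Returns (spikes, high_averages), each a list of (index, value) pairs.
    For averages, the index is the last minute of the trailing window.
    """
    spikes = [(i, c) for i, c in enumerate(minute_counts) if c >= spike_cpm]

    high_averages = []
    running_total = 0
    for i, count in enumerate(minute_counts):
        running_total += count
        if i >= window:
            running_total -= minute_counts[i - window]   # drop the oldest minute
        if i >= window - 1:                              # a full window is available
            average = running_total / window
            if average >= avg_threshold:
                high_averages.append((i, average))
    return spikes, high_averages

# Made-up data for illustration: a flat 15 cpm with one 34 cpm minute.
spikes, highs = flag_events([15] * 20 + [34] + [15] * 20)
print(spikes, highs)
```

In the made-up example the single 34cpm minute is flagged as a spike, but it is not enough on its own to lift the 10 minute average to 20cpm.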

October - 2015: * I'm reading responsible

reports that cesium-137 is showing up in Alaskan waters.

* As of 10/10/2015 I standardized on Co-ordinated Universal

Time, or "UTC" --which is similar to "GMT", but sans any DST changes. I

should have been doing this since March of 2011. (We need to don pirate

costumes and form a rabid, single issue political faction which is hell

bent on abolishing our absurd Daylight Saving Time clock changes --!--

Arrrrrr!)

* As of 10/31/2015, logging here has been within

our normal range, with isolated peaks (ie:

no preceding build-ups nor follow-on decays) as follows:

~33cpm peak at 17:30 hours on 10/2

~34cpm peak at 20:49 hours on 10/9

~34cpm peak at 09:33 hours on 10/10

(Switched to UTC time-dates)

~33cpm peak at 06:13 hours on 10/25

(10 minute avgs reached 20cpm 4 times --otherwise isolated.)

~33cpm peak at 00:19 hours on 10/26
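
For lining up the older local-time entries with the UTC ones, a conversion sketch follows. It assumes fixed offsets of 8 hours for PST and 7 hours for PDT and leaves it to the reader to know which one a given entry was logged in. The helper name is hypothetical.

```python
from datetime import datetime, timedelta, timezone

PST = timezone(timedelta(hours=-8), "PST")   # fixed offset, no DST handling
PDT = timezone(timedelta(hours=-7), "PDT")

def to_utc(year, month, day, hour, minute, local_zone=PST):
    """Convert a local Pacific time (PST by default) to UTC."""
    local = datetime(year, month, day, hour, minute, tzinfo=local_zone)
    return local.astimezone(timezone.utc)

# The solar flare time noted in January 2014: 08:17 PST.
print(to_utc(2014, 1, 30, 8, 17).strftime("%Y-%m-%d %H:%M UTC"))
```

The January 2014 solar flare entry (08:17 PST) comes out as 16:17 UTC, matching the time given there. Note that a late evening local time can land on the next UTC date.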

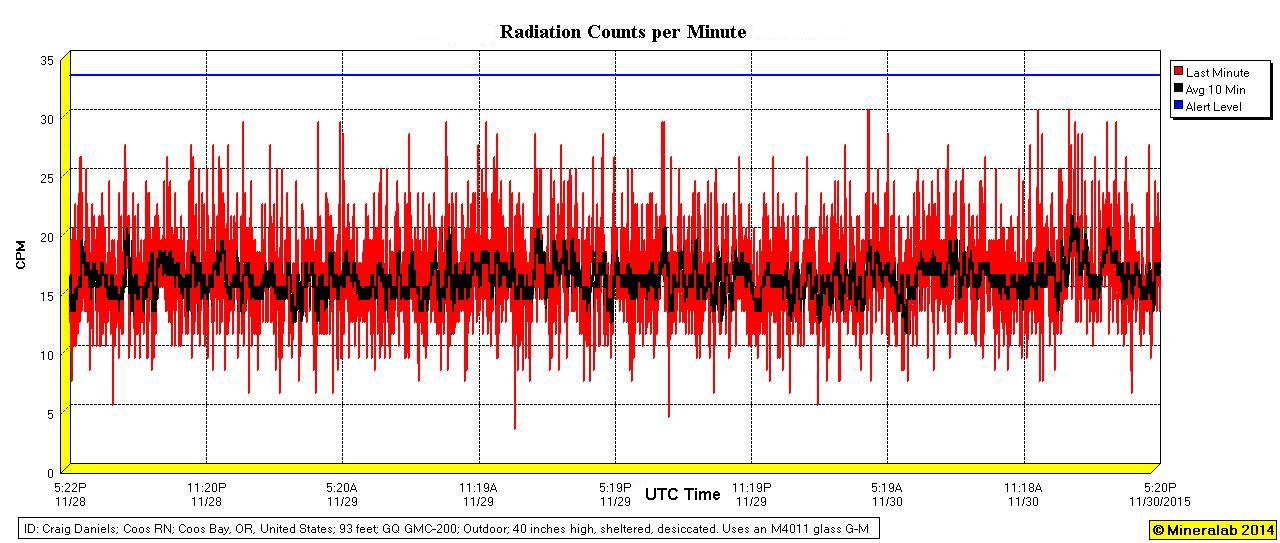

November - 2015: * As of 11/30/2015,

and except for a double-peaked 10 minute running average excursion on the

30th (to 22cpm, but 1 minute peaks did not reach 33cpm), logging here has

been within our normal range, with isolated peaks

(ie:

no preceding build-ups nor follow-on decays) as follows:

~The log for the day of 11/2 was

somehow lost.

~The 10 minute running average

reached my reporting threshold of 20cpm at 08:30 hours on 11/4.

~The day's 24 hour average was

15.64cpm on 11/10 (The 7-day average ending 1/31/2014 was 15.77)

~The 10 minute running average

reached 20cpm at about 01:39 hours on 11/15.

~The 10 minute running average

reached 20cpm at about 16:15 hours on 11/17.

~33cpm peak at 10:30 hours on 11/18

~On 11/20 I started saving images

of the daily graph (to the default directory for GG-5b).

~The 10 minute running average

reached 22cpm at about 17:00 hours on 11/20.

~35cpm peak at 10:46 on 11/22.

~The 10 minute running average

reached 20cpm at about 05:30 hours on 11/24.

~The 10 minute running average

reached 23cpm at about 20:00 hours on 11/24.

~The 10 minute running average

reached 20cpm at about 09:30 hours on 11/25.

~33cpm peak at 12:51 hours on 11/25.

~34cpm peak at 23:50 hours on 11/26.

~The 10 minute running average

touched 20cpm 3 times on 11/29.

~The 10 minute running average

touched 20cpm 3 times on 11/30 --once to 22cpm, but no 33cpm 1-min peaks.

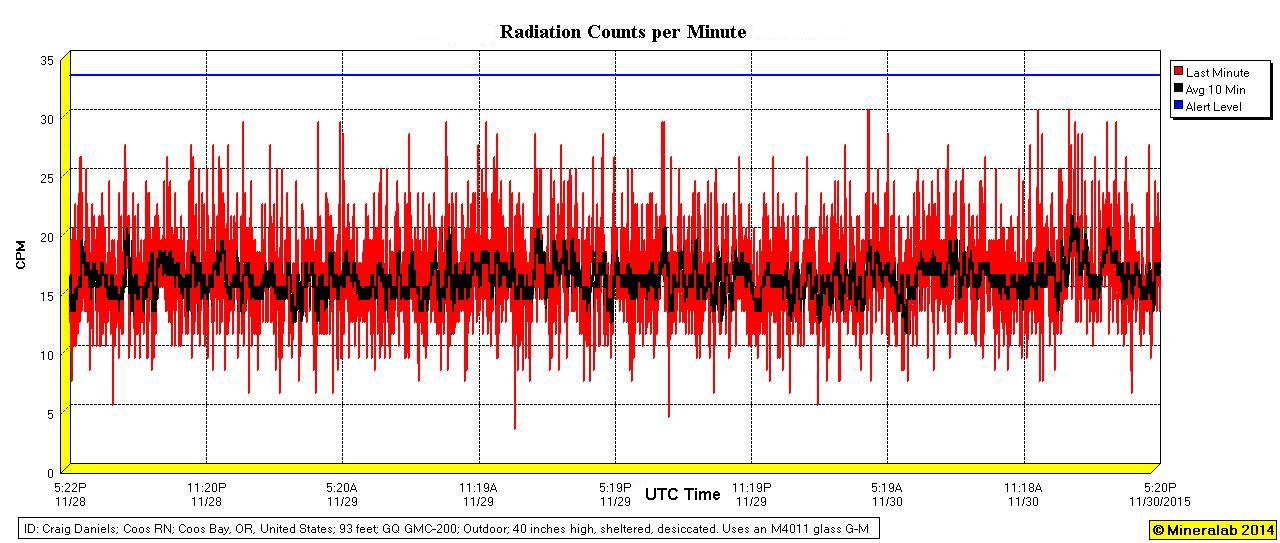

11/28-11/30/2015utc (Ctrl+ to enlarge the images.)

December - 2015: * As of January

1, 2016, logging here has been within our normal range,

with isolated peaks (ie: no preceding build-ups

nor follow-on decays) as follows:

~34cpm peak at 15:12 hours on 12/1.

~The 10 minute running average reached

20cpm at about 13:00 hours on the 4th.

~The 10 minute running average reached

20cpm at about 18:00 hours on the 6th.

~The 10 minute running average reached

22cpm at about 14:30 hours on the 8th.

~The 10 minute running average reached

21cpm at about 16:00 hours on the 10th.

~The 10 minute running average reached

20cpm at about 09:00 hours on the 11th.

~33cpm peak at 00:56 hours on 12/17.

~The 10 minute running average reached

20cpm at about 05:15 hours on the 18th.

~On 12/24, I started logging (but I'll

usually not post) the 24 hour average for each day. I'm also saving the

daily graphs. The first averages have been: 15.75, 15.12, 15.38, 15.33,

15.17, 15.44, 15.39, 15.20 (through 12/31). These average 15.35cpm, whereas

the last week of January, 2014 averaged 15.77 (with the same outside-inside

equipment and configuration).

~33cpm peak at 20:43 hours on 12/26.

January - 2016

* All times/dates UTC. As of January 19, logging here

has been within our normal range, with isolated peaks

(ie:

no preceding build-ups nor follow-on decays) as follows:

~4th: The 10 minute running average reached

20cpm at about 23:15 hours.

~5th: The 10 minute running average reached

20cpm at about 13:15 hours.

~Somehow, the program shut down on the

6th, so I lost the data for the 6th-7th period.

~7th: The 10 minute running average reached

20cpm at about 17:50 hours.

~7th: 33cpm peak at 18:10 hours.

~9th: The 10 minute running average reached

23cpm at about 18:17 hours.

~12th: The 10 minute running average

reached 20cpm at about 17:00 hours.

~15th: 33cpm peak at 01:44 hours.

~17th: 33cpm peak at 08:07 hours.

~18th: 33cpm peak at 05:09 hours.

~19th: 33cpm peak at 11:38 hours.

~No 4.4+ sigma peaks through to the 31st,

and the averages are running low.

* I'll continue to base percentages on year 2014 being

100% (15.77cpm).

* Why do the 1 and 10 minute averaged levels of 33-34cpm

and 20cpm repeat so often? This has to be some sort of an event with an

irregular but frequent recurrence. I checked the USGS "Real-time earthquake

map" (via the R-G Oregon Live Link) and tallied 9 small earthquakes spanning

the past 30 days --within a radius of about 130 miles (land and ocean).

None of them came even close to the UTC timed peaks on this page.

* Perhaps I can get some regional magnetometry to match

up.

* We've recently had a few frog drowning rainfalls,

but with no marked effect upon the gamma counts that I can see. Perhaps

if I compared rainfall rates to the rolling 10 minute

averages, I could see some match-ups.

February - 2016

* All times/dates UTC. As of February 17, logging here

has been within our normal range, with isolated peaks

(ie:

no preceding build-ups nor follow-on decays) as follows:

~2nd: The 10 minute running average reached

21cpm at about 05:00 hours.

~2nd: 32cpm peak at 21:07 hours, noted

because it was such a stand-out peak (although less than 33cpm).

~3rd: The 10 minute running average reached

20cpm at about 19:30 hours.

~I stopped saving daily graphs as of

the 3rd.

~9th: The 10 minute running average reached

20cpm at about 20:15 hours.

~15th: 33cpm peak at 06:29 hours.

~The daily average fell to 94.6% on the

16th, so I decided to do a graph for the month of February as well --and

to corroborate January's low average. Past graphs have dipped as low as

91%, however. There's been one power failure (a broken wire) and time will

tell if this is about the (sealed in with the Geiger counter) backup battery

running down, but I don't think so.

~17th: Noted: an abrupt increase of 5.2%

in the daily average.

~18th: The 10 minute running average

reached 20cpm at about 15:15 hours.

~21st: The 10 minute running average

reached 20cpm at about 14:15 hours.

~23rd: The 10 minute running average

reached 20cpm at about 07:00 hours.

~Oddly, that 10 minute average "ceiling"

pretty much continues.

~No other notable excursions through

the end of this month.

~The last 7 days of February averaged

97.3% --a tad higher than the last 7 days of January.

March - 2016

* As of 4/1/2016, logging here has often been below

our normal range, with isolated peaks (i.e.:

no preceding build-ups nor follow-on decays) as follows:

~ 5th: The 10 minute running average

reached 20cpm at about 22:10 hours.

~ 5th: 33cpm peak at 21:58 hours. (A

brief decay, but no build up. Saved the graph.)

~ 7th: Found the logging program stopped.

Rebooted the OS.

~12th: The 10 minute running average reached

20cpm at about 12:15 hours.

~19th: 36cpm peak at 04:23 hours. (No build

up, brief 10m avg plateau at 18cpm.)

~20th: The 10 minute running average reached

20cpm at about 06:00 hours.

~21st: The 10 minute running average reached

20cpm at about 12:00 hours. (isolated)

~21st: The 10 minute running average reached

20cpm at about 18:30 hours. (isolated)

~22nd: 33cpm peak at 03:04 hours. (The average

over the past 3 days: 15.51 = 98.35%)

~23rd: This day's average fell to 14.98cpm

= 95% --the 3rd such low this year.

~24th: Only got the last 12 hours of this period

(forgot to restart the logging program in the morning).

~29th: The 10 minute running average reached

20cpm at about 15:45 hours.

~30th: The 10 minute running average reached

20cpm at about 10:50 hours. (isolated peak)

~30th: The 10 minute running average reached

21cpm at about 11:20 hours. (isolated peak)

~31st: The last 7 days averaged 96.8% of this

station's 2014 baseline (100% = 15.77cpm).
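The percentages in these notes are simple ratios against the 2014 baseline of 15.77cpm. A minimal sketch of that arithmetic in Python follows. The function name is hypothetical and this is an illustration only, not the logging program used here.

```python
BASELINE_CPM = 15.77  # year 2014 average, taken as 100%

def percent_of_baseline(avg_cpm, baseline=BASELINE_CPM):
    """Return a daily (or multi-day) average as a percentage of the baseline."""
    return 100.0 * avg_cpm / baseline

# The two March 2016 figures quoted above:
print(round(percent_of_baseline(15.51), 2))  # 3 day average -> 98.35
print(round(percent_of_baseline(14.98), 1))  # average for the 23rd -> 95.0
```

Both results match the percentages quoted for the 22nd and 23rd.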

April - 2016

* The following comments are based on whole counts.

* As of 5/1/2016, my counts have been trending back

up toward our

normal range, with isolated peaks (i.e.:

no preceding build-ups nor follow-on decays) as follows:

~ 2nd: The 10 minute running average

reached 20cpm at about 04:30 hours.

~ 7th - 8th: I found the GG-5 logging

program off. Rebooted the operating system.

~10th: 32cpm peak at 14:30 hours. (From

graph only. GG-5's SS overwrote data after the 3rd day.)

~14th: The 10 minute running average reached

20cpm at about 06:40 hours.

~15th: 32cpm peak at 01:46 hours.

~17th: 32cpm peak at 00:42 hours, and again

at 02:30 hours.

~22nd: The 10 minute running average reached

20cpm at about 16:15 hours.

(I don't know why the 10 minute count is so often 20cpm.)

~30th: The rest of April was "low" to "average"

with no one or ten minute peak reaching levels of note.

May - 2016

A comparison of straight counts logging to my (brief)

"minus noise" logging.

* As of 6/1/2016, my counts have been trending back

up toward our

normal range, with peaks (one

of which appeared to be a build-up and decay) as follows:

~ 1st: 33cpm peak at 20:24 hours (UTC).

~ 3rd: The 10 minute running average

reached 20cpm at about 10:00 hours.

~ 6th: 32cpm peak at 16:30 hours.

~ 8th: The 10 minute running average

reached 21cpm at about 00:30 hours.

~ 8th: 33cpm peak at 03:52 hours.

~10th: The 10 minute running average reached

20cpm at about 15:00 hours.

~10th: 33cpm peak at 22:17 hours.

~11th: 32cpm peak at about 10:45 hours.

~13th: 34cpm peak at 06:06 hours.

~13th: The 10 minute running average reached

20cpm at about 13:15 hours.

~14th: 32cpm peak at 11:17 hours.

~14th: Another 32cpm peak at about 14:30 hours.

~17th: The 10 minute running average reached

20cpm at about 12:45 hours.

~20th: Found my logging program stopped and

data lost for the day. Rebooted OS.

~21st: 32cpm peak at 19:25 hours.

~21st: The 10 minute running average reached

20cpm at about 19:40 hours (almost a decay from that 32 peak,

but looks more like a coincidental stand-alone). Saved graph and SS.

~23rd: The 10 minute running average reached

20cpm at about 06:45 hours.

~24th: 33cpm peak at 06:22 hours.

~25th: 32cpm peak at 13:20 hours. (Saved SS

& graph.

Submersion tests today.)

~28th: A 33cpm peak at 15:24 hours, surrounded

by a brief build-up and decay --which amounted to a 10 minute

running average peak of 21cpm at about the same time. Although these peaks,

alone, are barely

remarkable, a "build-up and decay" is unusual enough to take note of (if

not graph).

~30th: The 10 minute running average reached

20cpm at about 22:05 hours. (Today's average reached 100.1%)

~31st: The 10 minute running average reached

20cpm at about 08:15 hours. (Today's average reached 102.4%. )

June - 2016

(Apologies: This graph evaporated for a few days.)

* My notices of higher peaks were growing rather lengthy

--now that "background" radiation counts are returning to the 2014 baseline

average (15.77 = 100%), I've arbitrarily raised the bar --to a whole count

of 22cpm for 10 minute running averages (140% of baseline), and to

a one minute count of 33 (209% of baseline).

* G-M tube mid-level energy gamma efficiency might

only be about 2%, but G-M tubes will continue to count up into Cosmic energy

levels (well above scintillator levels) so G-M tubes might have good response

to muons (flux ~ 1/cm^2/minute, they carry charge & are some 200x the

mass of an electron, so the shielded noise count seems about right, given

the profile of an LND-7317 G-M tube). An argument against: they're relativistically

fast enough to only be perceptually present --and don't hang around long

enough to ionize G-M tube gas. (Muons collide with and get amplified by

lead shielding due to secondary splatters --to the extent that counts can

go up with over 100mm of shielding.)

~ 9th: The 24 hour run ending today averaged

101.8 percent.

~10th: 33cpm peak at 12:32 hours (UTC).

~11th: The 24 hour run ending today averaged

101.8 percent.

~18th: A 33cpm peak at 06:50 hours.

~18th: A 33cpm peak at 07:17 hours.

~22nd: Found my logging program stopped and

data lost for the day. Rebooted OS. Due to the increasing

frequency of my logging program crashes, I'm scheduling OS reboots every

8 days now.

~30th: Above times UTC/GMT. No further threshold crossing

peaks to report, as of this (PST) morning.
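The two June 2016 thresholds (a one minute count of 33, and a 10 minute running average of 22) can be sketched as a simple check over a list of one minute counts. This is a hedged illustration: the function names and the sample numbers are made up, "reaching" a threshold is assumed to mean greater than or equal, and it is not how GeigerGraph or the station's own logging works.

```python
ONE_MIN_THRESHOLD = 33   # one minute count, in cpm
TEN_MIN_THRESHOLD = 22   # 10 minute running average, in cpm

def running_average(counts, window=10):
    """Average of each full run of `window` consecutive one minute counts."""
    return [sum(counts[i:i + window]) / window
            for i in range(len(counts) - window + 1)]

def threshold_crossings(counts, window=10):
    """Return (indexes of one minute peaks, start indexes of high averages)."""
    peaks = [i for i, c in enumerate(counts) if c >= ONE_MIN_THRESHOLD]
    high_runs = [i for i, avg in enumerate(running_average(counts, window))
                 if avg >= TEN_MIN_THRESHOLD]
    return peaks, high_runs

# Made-up one minute counts with a single isolated spike at minute 7.
sample = [14, 17, 15, 16, 13, 18, 15, 35, 16, 14, 15, 17, 16, 12]
print(threshold_crossings(sample))  # -> ([7], [])
```

An "isolated" peak shows up as a one minute hit with no matching entry in the second list, since a lone spike barely moves the 10 minute average.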

July - 2016

~ 2nd: 34cpm peak at 10:15 hours (UTC). (Isolated)

~ 2nd: 35cpm peak at 14:43 hours. (Isolated)

~15th: 35cpm peak at 02:45

hours. (Isolated, but saved automatic day graph anyway.)

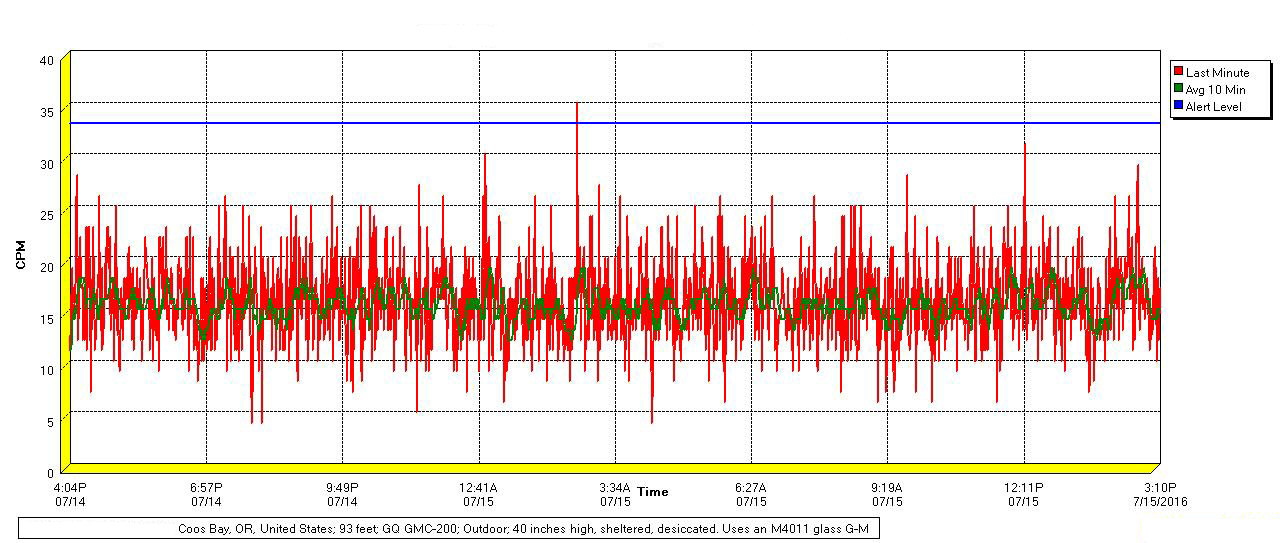

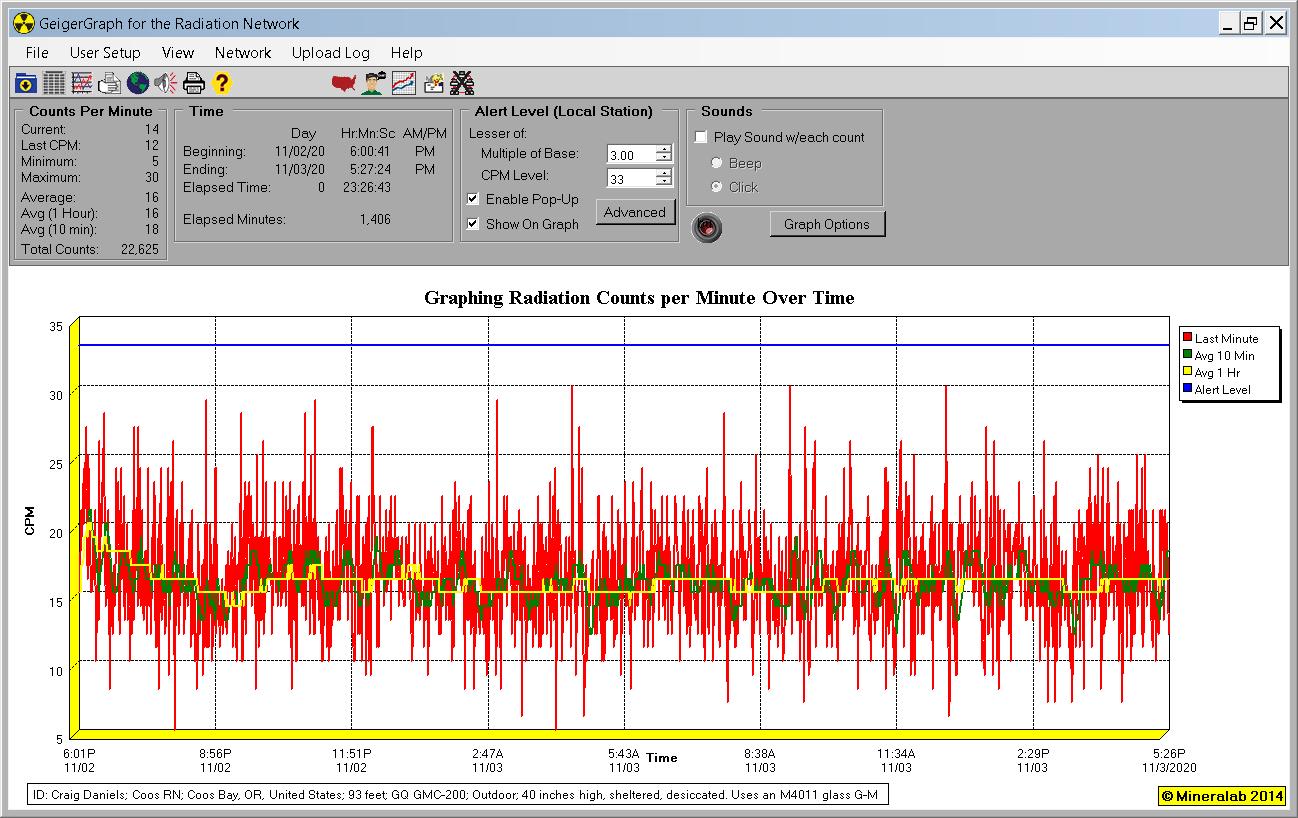

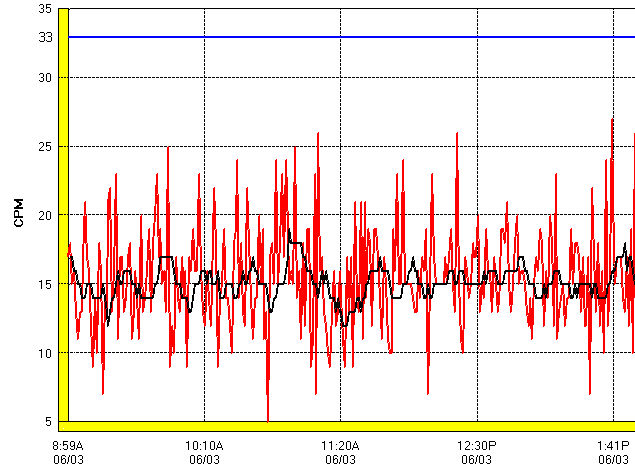

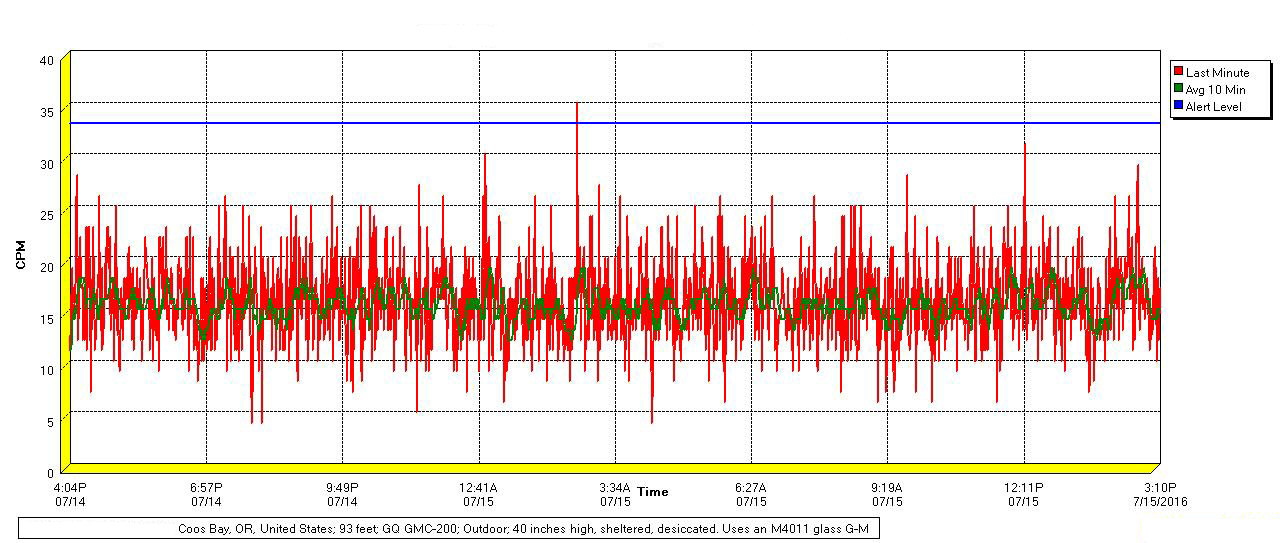

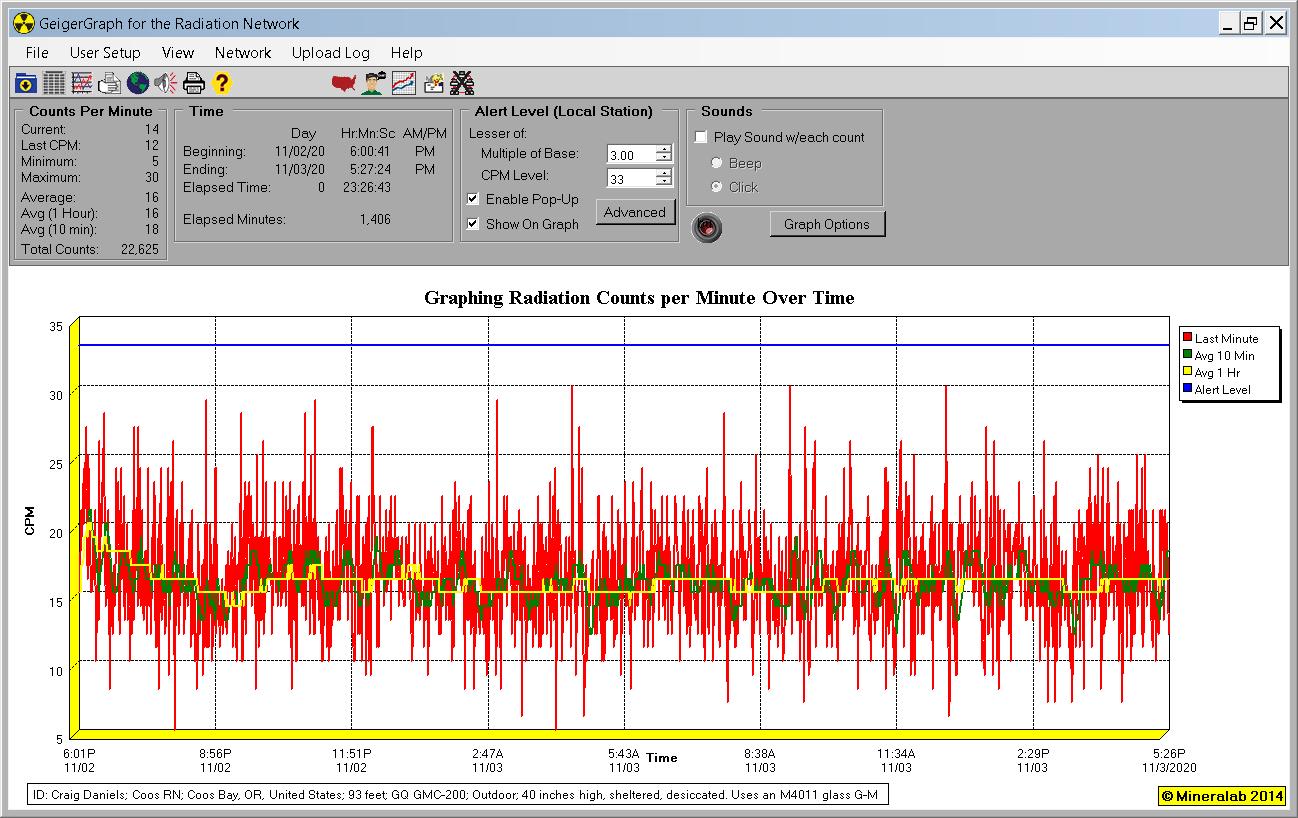

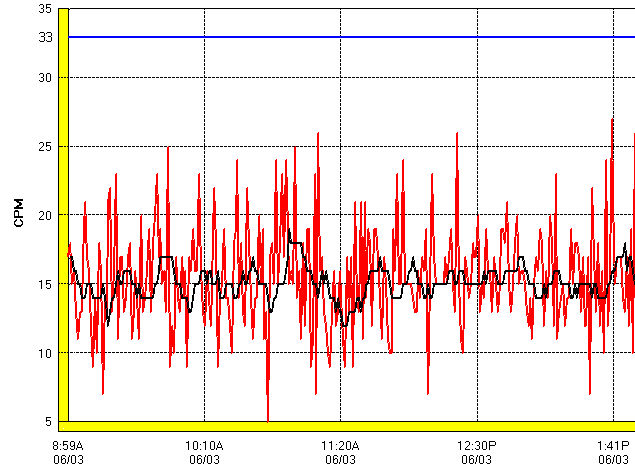

This is a typical graph, probably including that 35cpm

spike plus all the lesser ones. Catching and

counting gamma rays is similar to counting people

passing through the gate at your county fair --per

minute. Sometimes a bunch goes through, sometimes

hardly anyone, and you can expect a distribution

of counts over time (a "Poisson distribution") that's

similar to this graph. I'm going by results now (like: "how

often do I get a given peak?") rather than calculating

so many "standard deviations" from a long term average --and

by whether the graph appears to show a build up to

a bit of a high plateau --rather than throwing a one-off "isolated" peak.
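The "how often do I get a given peak?" question can be roughed out from the Poisson distribution mentioned above. A minimal sketch, assuming that one minute counts are independent and Poisson distributed around the 2014 baseline of 15.77cpm. Real counts also drift with weather and solar activity, so treat the result as a ballpark only. Function names are illustrative.

```python
import math

def poisson_pmf(k, mean):
    """Probability of exactly k counts in one minute."""
    return math.exp(-mean) * mean ** k / math.factorial(k)

def poisson_tail(k_min, mean):
    """Probability of k_min or more counts in one minute."""
    return 1.0 - sum(poisson_pmf(k, mean) for k in range(k_min))

mean_cpm = 15.77          # 2014 baseline
p_peak = poisson_tail(33, mean_cpm)
per_day = p_peak * 24 * 60
print(f"chance per minute of 33cpm or more: {p_peak:.2e}")
print(f"expected such minutes per 30 days: {30 * per_day:.1f}")
```

Under these assumptions, pure chance alone would be expected to produce a few 33cpm-or-higher minutes in a typical month.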

~21st: Lost data, so only the late night and early

morning 10 hours, which I "corrected" by +2.1%, based on a

recent day-to-night averaging comparison.

~22nd: 33cpm peak at 05:16 hours (UTC). (Isolated)

~31st: Above times UTC/GMT. No further threshold crossing

peaks to report, as of this (PST) morning.

August - 2016

~ 8th: 33cpm peak at 09:50 hours (UTC). (Isolated)

~ 9th: 34cpm peak at 04:12 hours. (Isolated)

~ 9th: 33cpm peak at 19:24 hours. (Isolated)

~ 14th: Was only able to monitor and average the last

11 hours of this 24 hour period.

~ 18th: 34cpm peak at 11:59 hours. (Isolated)

~ 20th: 33cpm peak at 03:35 hours and another 33cpm

peak at about 09:00 hours. (Both isolated)

~ 24th: 34cpm peak at 19:23 hours. (Isolated.) Noted:

similarly timed peak on the 9th. High 102.7% average.

~ 25th: 33cpm peak at 23:20 hours. (Isolated.)

~ Note: single, one minute peaks are accurately timed

(UTC), while subsequent peaks or high 10 minute averages must be estimated

from their positions on GeigerGraph

plots (which have peculiar time intervals).

~ 31st: Above times UTC/GMT. No further threshold

crossing peaks to report, as of this (PST) morning.

September - 2016

~ 1st: 33cpm peak at 09:50 hours (UTC). (Isolated)

~ 28th: 34cpm peak at 07:06 hours. (Isolated)

~ 30th: Above times UTC/GMT. No further

threshold crossing peaks to report, as of this (PST) morning.

October - 2016

~ 2nd: 35cpm peak at 03:58 hours (UTC). (Isolated)

~ 3rd: 33cpm peak at 12:16 hours. (Isolated)

~ 11th: 35cpm peak at 07:16 hours. (Quite isolated

--from a very quiet looking graph (saved).)

~ 12th: 33cpm peak at 13:01 hours. (Isolated, but

my 24 hour average is up. Saved graph and spreadsheet.)

~ 15th: Stormy weather, but doesn't appear to affect

the graph (nor in years past records).

~ 27th: 33cpm peak at 22:11 hours. (Isolated)

~ 31st: Above times UTC/GMT. No further threshold

crossing peaks to report, as of this (PST) morning.

November - 2016

~ 6th: 33cpm peak at 20:06 hours (UTC). (Isolated)

~ 18th: 33cpm peak at 14:49 hours. (Isolated)

~ 21st: 33cpm peak at 10:03 hours. (Isolated)

~ 23rd: 34cpm peak at 16:23 hours. (Isolated)

~ 30th: Times are UTC/GMT. No further threshold crossing

peaks to report, as of this (PST) morning.

December - 2016

~ 3rd: 37cpm peak at 13:50 hours (UTC). (Isolated)

~ 9th: 37cpm peak at 16:17 hours. (Isolated)

~ 11th: 33cpm peak at 10:37 hours. (Isolated)

~ 12th: 35cpm peak at 11:52 hours. (Isolated)

~ 14th: 34cpm peak at 01:50 hours. (Isolated)

~ 15th: The past 24 hour average reached 104.1% this

morning. Otherwise, the daily graph looks normal.

~ 19th: A run-up and then down --as noted.

~ January 1st: Times are UTC/GMT. No further threshold

crossing peaks as of this morning.

~~~~~~~~~~~~~~~~

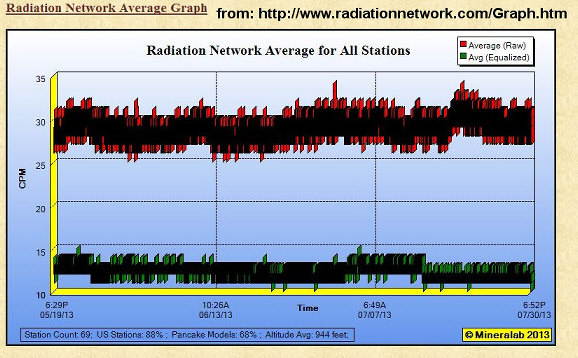

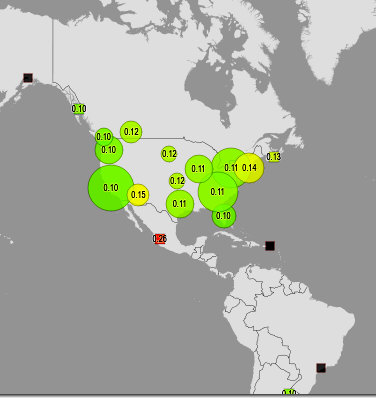

A comparison of my monitoring in Oregon, USA with

Peter Daley's in Australia:

* That mid-month rough correspondence suggests that we

might be looking at a commonality of cosmic radiation,

perhaps with influence from the recent solar winds

--plus overlays of more local phenomena and "noise".

* One gets the impression that there was some kind

of an event spanning the 17th through the 19th. / The solar notations are

from my readings/interpretations of Dr. Tony Phillips' daily descriptions

at Spaceweather.com.

* Some are of the opinion that solar winds and other

activity "sweep away" a lot of the primary cosmic rays (from deep space),

the secondaries of which (mostly muons near sea level) make up half or

more of the "background radiation" that Geiger counter type monitoring

stations tally up.

* If I get another distinctive looking graph, I'll

compare data again.

January - 2017

~ 5th: Don't know what to make of that saw

tooth. We had 4.5 inches of fluffy new snow on the 4th.

~ 31st: No threshold crossing peaks as of this morning.

There was only one sunspot this past month (that I recall)

but a lot of solar wind --for which you can check the record at: Space

Weather

February - 2017

~ 3rd: 33cpm peak at 10:00 hours (UTC). (Isolated)

~ 5th: 33cpm peak at 18:16 hours. (Isolated)

~ 21st: 33cpm peak at 09:45 hours. (Isolated)

~ 24th: 34cpm peak at 08:08 hours. (Isolated)

~ 24th: 33cpm peak at 09:00 hours (+/-). (Isolated)

~ March 1st: No other threshold crossing peaks as

of this morning.

March - 2017

~ For months there's been too much recent rainfall to

do a beach walk reading (cesium being water soluble).

~ March 31st: No threshold crossing peaks as of this

morning.

April - 2017

~ 7th: 34cpm peak at 18:59 hours (UTC). (Isolated)

~ 30th: No other threshold crossing peaks as of this

morning (PST/PDT).

May - 2017

~ 25th: 34cpm peak at 08:26 hours (UTC). (Isolated)

~ 31st: No other threshold crossing peaks as

of this morning (PST/PDT).

June - 2017

~ 8th: The day's graph looked normal --save that

the average is somewhat high and the above graph looked like a build-up.

(We hit 104% six plus months ago.)

~30th: No threshold (33cpm) crossing peaks as of this

morning (PST). We've had five 32cpm peaks this month, all of them isolated

--meaning no build-ups and they looked like the high end of statistical

noise. However: that there've been no 33cpm peaks is statistically significant.

High altitude counts (see Space

Weather) show a marked increase of 10 to 15%, which is attributed to

our Sun having entered a quiet period --the solar wind being less able

to sweep away incoming cosmic primary rays. Peter Daley's Australia-based

station has seen just this much of an increase, but my station

cleaves doggedly near its long term average of 15.61cpm (= "100%" and approximately

an indicated "0.10 uSv" [on a Geiger counter so calibrated in otherwise

meaningless Cs-137 gamma "dose" units]).
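One hedged way to put a number on "statistically significant": if one minute counts were independent and Poisson distributed around the 15.61cpm long term average (an idealization), the chance of a 30 day month passing with no minute at 33cpm or above can be sketched as follows. Names are illustrative.

```python
import math

def prob_at_least(k_min, mean):
    """Poisson probability of k_min or more counts in one interval."""
    below = sum(math.exp(-mean) * mean ** k / math.factorial(k)
                for k in range(k_min))
    return 1.0 - below

mean_cpm = 15.61                 # long term station average
minutes = 30 * 24 * 60           # one minute intervals in a 30 day month
p_minute = prob_at_least(33, mean_cpm)

# Chance that every one of those minutes stays below 33cpm.
p_quiet_month = (1.0 - p_minute) ** minutes
print(f"chance of a month with no 33cpm peaks: {p_quiet_month:.3f}")
```

Under those assumptions the result comes out at a few percent, so a peak-free month is unusual but not impossible.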

July - 2017

~ 1st: 33cpm peak at 21:34 hours (UTC,

isolated).

~ 2nd: 33cpm peak at 00:05 hours (isolated).

~ 7th: 35cpm peak at 08:27 hours

(isolated).

~ 14th: 33cpm peak at 14:39 hours (isolated).

~ 26th: 33cpm peak at 14:23 hours (isolated).

~ 29th: 34cpm peak at 13:41 hours (isolated).

~ 30th: 33cpm peak at 13:33 hours (isolated).

~ 31st: No other threshold crossing peaks as

of this morning (PST/PDT).

August - 2017

~ 5th: 36cpm peak at approximately 05:30

hours (UTC, isolated).

~ 6th: 34cpm peak at 18:06 hours

(isolated).

~ 10th: 34cpm peak at 01:28 hours (isolated).

~ 11th: Spaced out --doing astronomy projects.

Let my Geiger counting go for 2 mornings. I've missed no peaks.

~ 13th: 36cpm peak at 18:16 hours (isolated).

~ 15th: 32cpm --below threshold, but looks like

a build-up of peaks at about 21:18 hours.

~ 16th: 33cpm peak at about (isolated). The

24 hour average reached 103% this morning (PST/PDT).

~ 17th: 34cpm peak at 13:09 hours. No avg build-up,

but there was a brief "build-up" of peaks (saved the graph).

~ 22nd: 38cpm peak at 13:55 hours --isolated? Minute-by-minute

peaks: 17, 20, 20, 38, 20, 16. Hmmmnn.

~ 23rd: This graph and report posted a day early on

account of the recent eclipse (morning of the 21st, PST/PDT).

~ 28th: 33cpm peak at 12:05 hours with sort

of a following decay.

* The 24 hour average is "way" up, which is only 6.2% above the station's

baseline average, but that's the highest since I started 24 hour averaging

in January of 2014.

* It's probably due to all the smoke in the air from the fierce forest

fires burning in Oregon. The smoke particles could be collecting radon.

The fires could also be releasing long-lived radionuclides (Cs-137?) which

were captured as the trees grew.

* Another possibility: during the eclipse (morning of the 21st) I noticed

significant sunspots. Today the Sun looks clear (at 8 power), and the day's

Space

Weather indicates that the solar wind is low, but is due to return

about August 31st. (The solar wind helps repel primary cosmic rays. Check

with Space Weather for more details.)

~ 31st: No other threshold crossing peaks as

of this morning (PST/PDT). The 1, 10, 60 minute graphs and the above 24

hour graph are normal.

September - 2017

~ 5th: 34cpm peak at approximately 16:43

hours (UTC, isolated).

~ 7th: Recent X-9 solar flare. A

CME might hit us on the 8th.

~ 9th: Two days averaged together

--no data lost, no more threshold crossing peaks as of this morning (PST/PDT).

~ 10th: I saw no indication of either the September

7th CME (arrived on the 10th, producing a "Forbush

minimum" at south pole) or the September 10th X class flare. See Space

Weather for more.

~ 11th: 33cpm peak at 14:31 hours (isolated).

~ 20th: 37cpm peak at 19:32 hours. Isolated,

but several curious, somewhat periodic, lesser peaks across graph.

~ 23rd: 39cpm peak at 13:19 hours. Very isolated

(12, 20, 39, 17, 21, 10).

~ 30th: 36cpm peak at 12:29 hours (UTC, isolated).

~ 30th: No other threshold crossing peaks as

of this morning (PST/PDT).

October - 2017

~ 4th: 33cpm peak at 10:40 hours (UTC,

isolated).

~ 4th: 33cpm peak at 23:17 hours

(isolated).

~ 8th: 34cpm peak at 03:18 hours

(isolated).

~ 10th: 35cpm peak at 08:30 hours (isolated).

~ 16th: 36cpm peak at 10:14 hours. Isolated

(no build up/down). Followed by 2 descending peaks (saved screen).

~ 25th: 33cpm peak at 12:57 hours (isolated).

~ 28th: 36cpm peak at 21:05 hours (isolated).

~ 31st: No other threshold crossing peaks as

of this morning (PST/PDT).

November - 2017

~ 7th: 35cpm peak at 09:10 hours (isolated).

~ 13th: 38cpm peak at 01:04 hours (isolated).

~ 13th: 33cpm peak at about 16:00 hours (isolated).

~ 18th: 33cpm peak at 18:06 hours (isolated).

~ 29th: 33cpm peak at 04:12 hours (isolated).

~ 30th: No other threshold crossing peaks as

of this morning (PST).

December - 2017

~ 13th: 33cpm peak at 17:38 hours (isolated).

~ 14th: 35cpm peak at 04:57 hours (isolated).

~ 15th: 33cpm peak at 11:22 hours (isolated).

~ 16th: 35cpm peak at 11:16 hours (isolated).

~ 25th: 35cpm peak at 08:39 hours (isolated).

~ 31st: No other threshold crossing peaks

as of this morning (PST).

January - 2018

~ 1st: 37cpm peak at 15:16

hours (always UTC) (isolated).

~ 2nd: 33cpm peak at 21:04 hours

(isolated).

~ 8th: No other threshold

crossing peaks as of this morning (PST).

~ 8th: 34cpm peak at 21:03

hours (isolated).

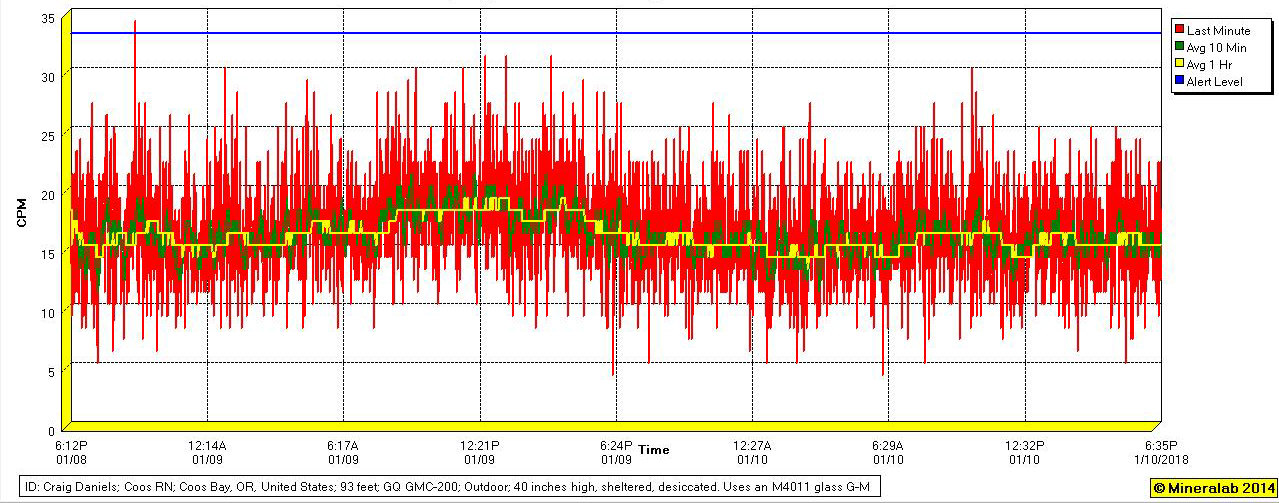

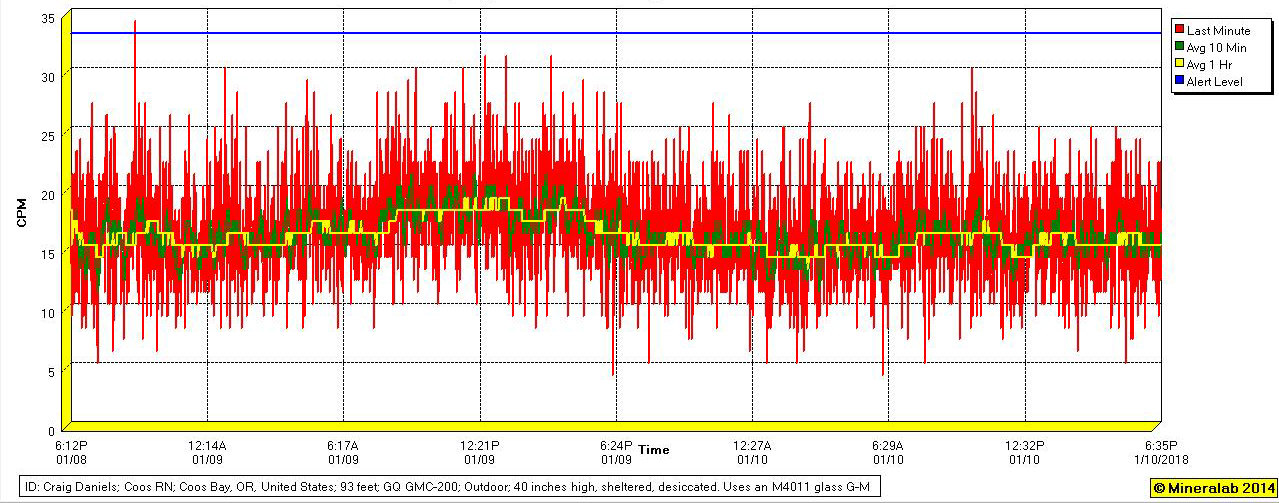

~ 9th: No threshold crossing

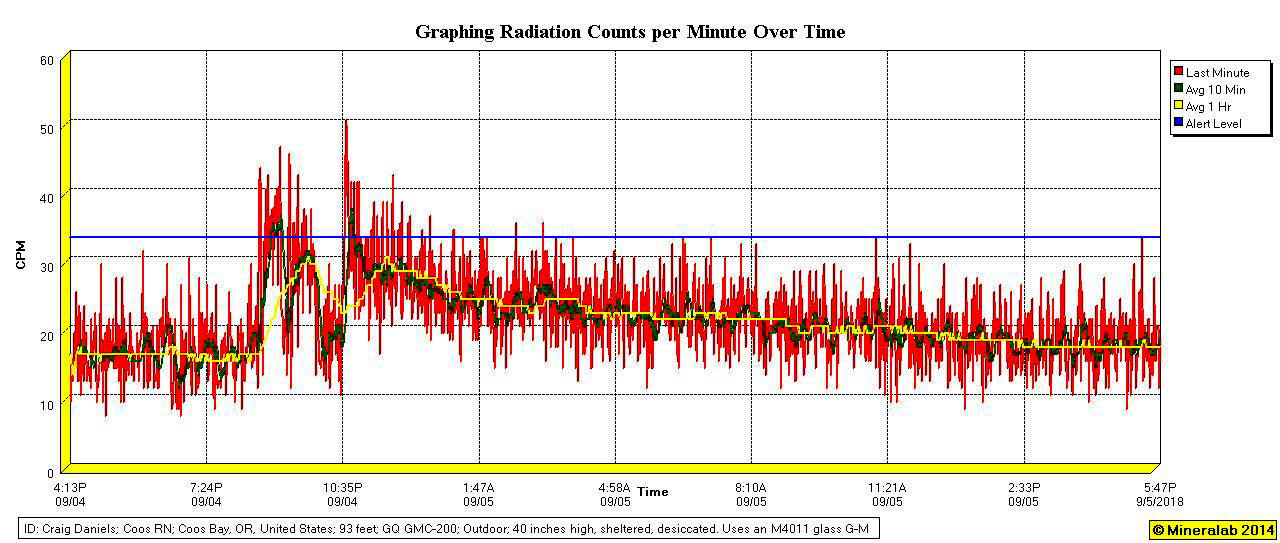

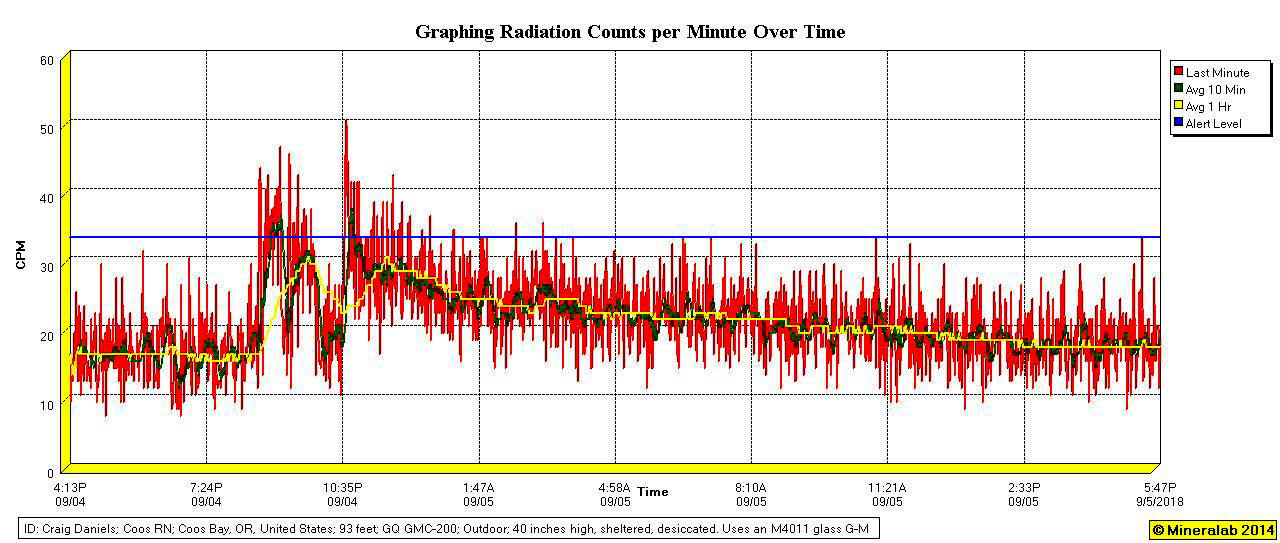

peaks today, but between 08:00 and 16:00 hours (UTC) the average hit 117.8%!

I'm not aware of any special reason for it. Here's a 2 day graph (Ctrl+

to enlarge the images.):

--for which 15.61cpm equals "100%" (my station's 2-week baseline average

4 years ago). My steep roof sheltered, sealed, one meter above the ground,

outdoor sensor only responds to gamma type and very hard beta radiation.

For all I know, this elevated count period could be cosmic ("muons") in

origin, or the 8 hour sleep period (midnight to 8am here) of a neighbor

with a radioactive tracer in her blood.

~ 14th: 36cpm peak at 06:13 hours (isolated).

~ 25th: 33cpm peak at 11:12 hours (isolated).

~ 31st: No other threshold crossing peaks

as of this morning (PST).

February - 2018

Unusually high 10 & 60 minute averages for February 18th-19th

(Ctrl+ to enlarge the images.)

~ 22nd: 35cpm peak at 17:35 hours (isolated).

~ 28th: No other threshold (33cpm) crossing

peaks as of this morning (PST).

March - 2018

~ 12th: 34cpm peak at 17:13 hours (isolated).

~ 13th: 35cpm peak at 07:05 hours (isolated).

~ 17th: 33cpm peak at 10:25 hours (isolated).

~ 21st: 35cpm peak at 15:23 hours (isolated).

~ 23rd: 35cpm peak at 16:01 hours (isolated).

~ 24th: Normal looking graph today.

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

April - 2018

~ 4th: 34cpm peak at 22:45 hours UTC (isolated).

~ 15th: 35cpm peak at 05:17 hours (isolated).

~ 17th: 37cpm peak at 18:34 hours (isolated)

~ 24th: 34cpm peak at 08:13 hours (isolated)

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

May - 2018

~ 4th: 33cpm peak at 23:48 hours UTC (isolated).

~ 14th: 34cpm peak at 13:34 hours UTC (isolated).

~ 21st: 34cpm peak at 07:48 hours UTC (isolated).

~ 24th: 33cpm peak at 12:16 hours UTC (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

June - 2018

~ 6th: 33cpm peak at 10:52 hours UTC (isolated).

~ 7th: 35cpm peak at 10:51 hours UTC (isolated).

~ 9th: Posted this 24 hour average up-tick,

following a long calm period.

(Saved the normal looking 24 hour graph. Highest peak was 31cpm.)

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

July - 2018

~ 7th: 33cpm peak at 02:11 hours UTC (isolated)

~ 7th: 33cpm peak at 18:09 hours UTC (isolated)

~ 12th through 18th: Monitoring has been continuous

and no peaks have been missed, but I averaged over

periods of 2 days and then 3 days --due to distractions and my increasing

forgetfulness.

~ 21st: 35cpm peak at 21:28 hours UTC (isolated)

~ 31st: 33cpm peak at 10:21 hours UTC (isolated)

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

August - 2018

~ 2nd: 33cpm peak at 04:01 hours UTC (isolated)

~ 2nd: 33cpm peak at 23:56 hours UTC (isolated)

~ 9th through to the

12th: * This gap was due to my having been injected with the radioactive

tracer technetium-99m, for the procedure: "myocardial perfusion imaging",

which (by calculating backwards from my later radiation counts) initially

rendered me something like 15 times higher than background --11 feet distant.

(Six hours later, with me seated 11 feet from my outside monitoring station,

it was tallying 7.4x background. Due to the isotope being distributed throughout

my body, the "inverse square law" doesn't apply at close range, but at

roughly 3 hours, the count hit 1800cpm [115x background] when I was standing

near the sensor's housing.)

* That said, there are "tens of millions" such procedures

performed each year (see Wikipedia's Tc-99m articles) with an over-all

casualty rate of 1 cancer or leukemia per 1000 patients. Technetium-99m

emits (some sources say "only") gamma at 0.142 MeV (142 keV), plus (other

sources say) about 12% of its decays go by internal conversion, ejecting electrons at a similar low energy.

The injected 99m pretty much decays into plain technetium-99 in a day or

two, which in turn decays by way of low energy beta (electron) emission

--but that takes 211,000 years, so its dose rate^

is pretty low --plus: the biological half-life for both 99m and 99 is only

24 hours.

* And that said: The radiation I've sustained

from my particular procedure is (per the Wiki) the equivalent of 94 (2

view) chest X-rays (!!) --and my wife gets a second-hand exposure at 1/10th

of a chest X-ray, so I now have a (linear exposure theory extrapolated)

statistical 1 in 5000 lifetime risk of developing a solid cancer or leukemia

--but it's actually much less, since I'm already 75 years old and (statistically)

almost dead anyway --but maybe it's more --after a lifetime's accumulation

of such risk factors (radioactive and otherwise).

* I have to wonder about all the technetium-99 which

passes through the bodies of "tens of millions" of patients and out into

the environment.

^ Since the indicated dose rates

of Geiger counters are typically calibrated with a standard cesium-137

source (the intermediary decay product of Barium-137m is what actually

radiates), perhaps the 142 keV gamma rays of technetium-99m are a lot less

ionizing and less biologically impactful than are the 662 keV gamma rays/photons

of Cs-137. I believe we report dose units of micro-REMs (uR) and Sieverts

(uSv) to each other for convenience, since they're cross-Geiger-counter

comparable --even though most folks take the indicated "dose" seriously.
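The "calculating backwards" step above can be sketched with the physical half-life of technetium-99m, which is about 6.01 hours. This is a rough illustration only: it treats the reading as pure Tc-99m signal, ignores the background share of the count and any change in geometry, and uses the 24 hour biological half-life quoted above for the combined ("effective") half-life. Function names are made up for the example.

```python
TC99M_HALF_LIFE_H = 6.01       # physical half-life, hours
BIOLOGICAL_HALF_LIFE_H = 24.0  # figure quoted in the notes above

def back_extrapolate(reading, hours_elapsed, half_life_h):
    """Estimate the earlier level from a later reading by undoing the decay."""
    return reading * 2.0 ** (hours_elapsed / half_life_h)

def effective_half_life(physical_h, biological_h):
    """Combine physical decay and biological clearance rates."""
    return 1.0 / (1.0 / physical_h + 1.0 / biological_h)

# 7.4x background at six hours, physical decay only:
print(round(back_extrapolate(7.4, 6.0, TC99M_HALF_LIFE_H), 1))   # -> 14.8
# Effective half-life when clearance from the body is included:
print(round(effective_half_life(TC99M_HALF_LIFE_H,
                                BIOLOGICAL_HALF_LIFE_H), 2))     # -> 4.81
```

With physical decay alone, the six hour reading of 7.4x background works back to roughly the "15 times" figure quoted above.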

~ 13th: 33cpm peak at 01:30 hours UTC (isolated).

~ 16th: 34cpm peak at 00:17 hours UTC (isolated).

~ 20th: 33cpm peak at 15:11 hours UTC (isolated).

~ 20th: 33cpm peak at 21:19 hours UTC (isolated).

~ 25th: 33cpm peak at 19:22 hours UTC (isolated).

~ 27th: A G3 solar sourced magnetic storm today, but

to no apparent effect.

~ 30th: 33cpm peak at 01:15 hours UTC (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

September - 2018

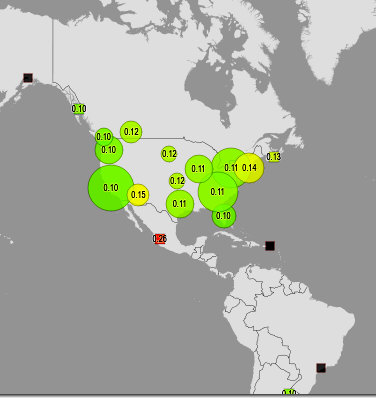

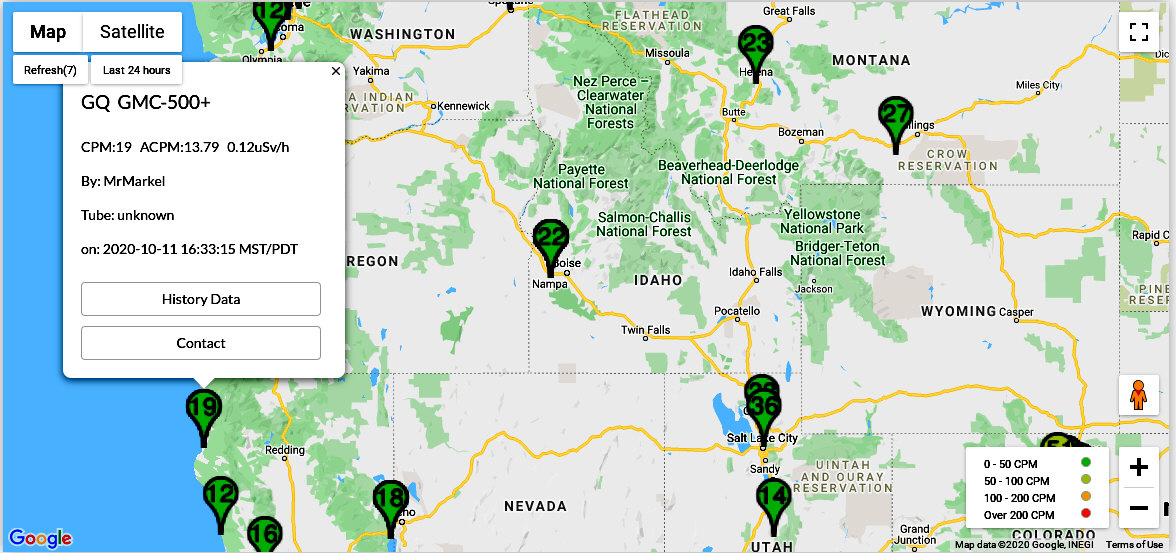

~ 5th: My outside station count did a whoop-dee-doo

at 20:38 hours UTC, hitting a 50cpm peak at about 22:40 hours. Judging

by the sudden rise and the rate of decay, this is about a Technetium-99m

dosed neighbor coming home from his/her appointment. I saw nothing special

to the north or south on the Radiation

Network map.

Sorry for the 12 hour UTC format --and for those time

base intervals. (Ctrl+ to enlarge the images.)

~ 8th: 33cpm peak at 06:08 hours UTC (isolated).

~ 13th: 33cpm peak at 21:21 hours UTC (isolated).

~ 16th: 36cpm peak at 22:23 hours UTC (isolated).

~ 18th: 33cpm peak at 06:43 hours UTC (isolated).

~ 25th: 34cpm peak at 02:43 hours UTC (isolated).

~ 29th: 33cpm peak at 20:29 hours UTC (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

October - 2018

~ 5th: This is the middle of 3 days averaged.

(Events and obligations come up and I forget.) (75 years old.)

~ 6th: 37cpm peak at 11:01 hours UTC (Quite

isolated --to a single minute).

~ 9th: 33cpm peak at 18:11 hours UTC (isolated).

~ 12th: 37cpm peak at 14:58 hours UTC (isolated --1

minute only).

~ 12th: 33cpm peak at 19:30 hours UTC (isolated).

~ 13th: 34cpm peak at 12:04 hours UTC (isolated).

~ 18th: Found my counter program shut down --must

have forgotten to restart it.

~ 19th: 33cpm peak at 16:45 hours UTC (isolated).

~ 23rd: 33cpm peak at 02:43 hours UTC (isolated).

~ 27th: Early graph post, due to the lack of an expected

"background" count increase.

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

November - 2018

~ 1st: 33cpm peak at 15:13 hours UTC (isolated).

~ 4th: 35cpm peak at 10:46 hours UTC (isolated).

~ 13th: 34cpm peak at about 21:00 hours UTC (isolated).

~ 14th: 38cpm peak at 05:01 hours UTC (isolated).

~ 16th through 24th: Highest 24 hour averages since

January-February and highest build-up/decay type

24 hour averages in years. Peter Daley (who operates the

Sunshine Coast station)

showed our e-group weather maps which indicate this event is surely due

to a smoke

incursion from California's wild fires (well south of my station's Oregon

location).

I'm re-calibrating my inside station to corroborate

my outside station's excursions.

~ 29th: 34cpm peak at 15:17 hours UTC (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

December - 2018

~ 8th: 33cpm peak at 04:08 hours UTC (isolated).

~ 15th: 34cpm peak at 11:25 hours UTC (isolated).

~ 18th: 34cpm peak at 17:21 hours UTC (isolated).

~ 21st: 33cpm peak at 20:49 hours UTC (isolated).

~ 27th: 33cpm peak at 01:40 hours UTC (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

January - 2019

~ 14th: 33cpm peak at 06:11 hours UTC (isolated).

~ 14th: 34cpm peak at 19:34 hours UTC (isolated).

~ 24th: 33cpm peak at 00:34 hours UTC (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

February - 2019

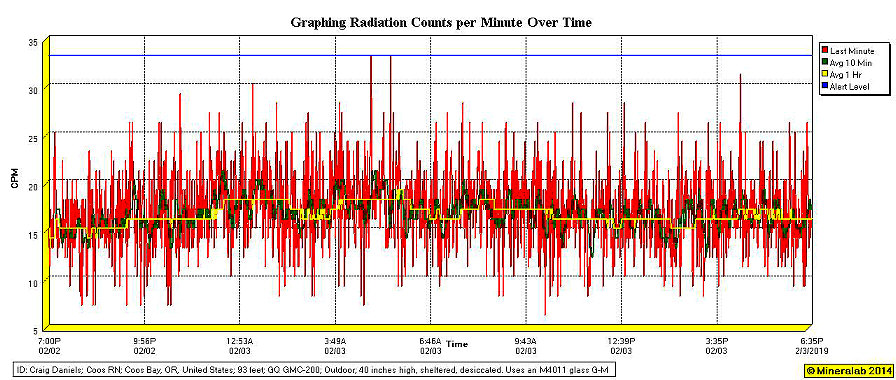

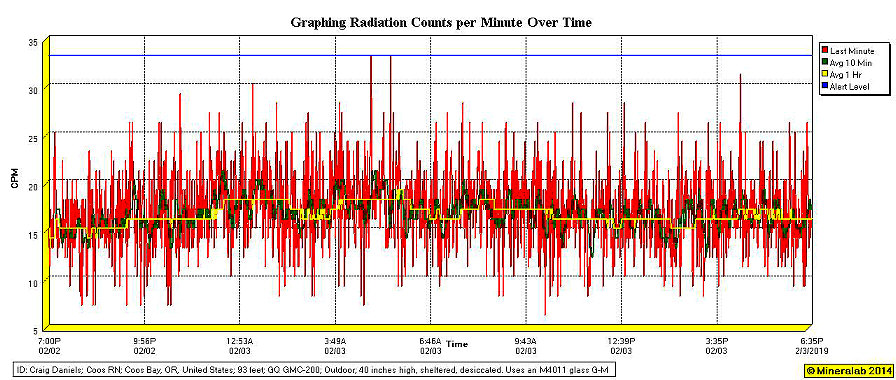

The day graph from 2/2/2019 to 2/3/2019 (Ctrl+

to enlarge the images.)

(Sorry for the 12 hour UTC format --and those inscrutable

time base intervals.)

~ 3rd: 33cpm peak at about 04:00 hours UTC

(isolated with soft build-up).

~ 3rd: 33cpm peak at 04:58 hours UTC (isolated

with soft build-up).

~ 4th: 33cpm peak at 07:47 hours UTC (isolated

with soft build-up).

~ 22nd: 34cpm peak at 03:26 hours UTC (isolated).

~ 28th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

March - 2019

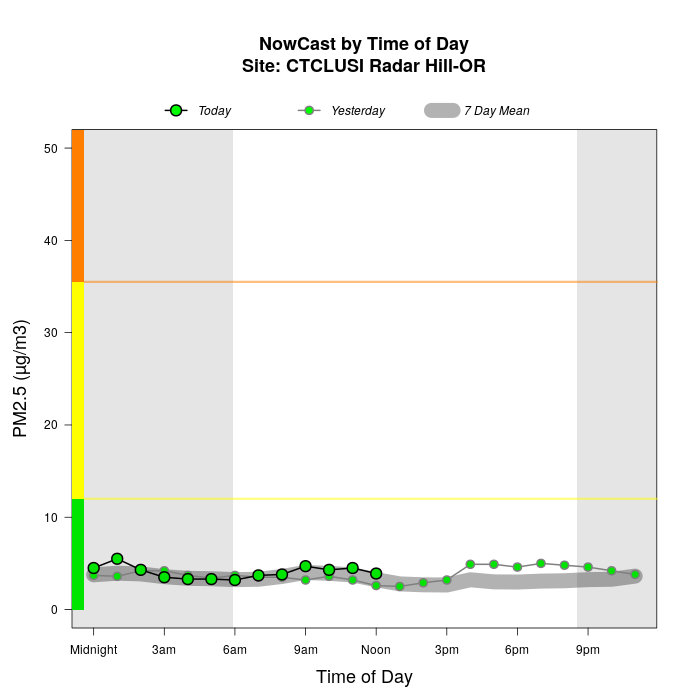

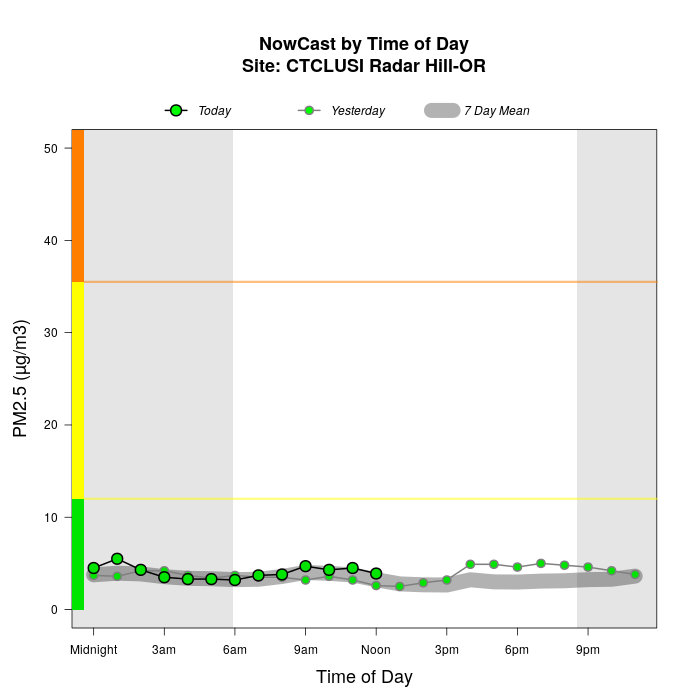

~ 31st: There's a monitoring station for smoke from regional

forest fires and slash burns that's only 1.6 miles from our outside radiation

monitoring station. It's on the Web and reports 2.5 micron size particulates

--as estimated micrograms per cubic meter of air. Maintained by a consortium

of volunteers, it posts hourly as well as daily averages. I've graphed

it until the 25th. There doesn't appear to be much correlation at these

low levels. No doubt we'll see some future mutual peaks --and I'll try

to access earlier smoke data as well. The smoke station is at about 285

feet (above MSL). My station's at 93 feet.
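Whether there is "much correlation" between the two series could be checked with a Pearson correlation coefficient over matching days. A minimal sketch, using made-up numbers (not this station's data, nor the smoke station's) and hypothetical names:

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (2 or more points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / math.sqrt(var_x * var_y)

# Placeholder daily values for illustration only.
daily_percent = [98.2, 99.1, 97.6, 100.4, 99.0, 98.7, 101.2]   # % of baseline
daily_pm25 = [4.0, 6.5, 3.2, 5.1, 7.9, 4.4, 6.0]               # ug/m^3

print(f"r = {pearson_r(daily_percent, daily_pm25):+.2f}")
```

Values near zero would support the "not much correlation" impression, though a week or two of data is too little to settle it either way.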

March - 2019 (continued)

~ 3rd: 33cpm peak at 23:30 hours UTC (isolated,

with a 31cpm leading spike).

~ 6th: 33cpm peak at 16:09 hours UTC (isolated,

but a busy graph today).

~ 22nd: 33cpm peak at 07:37 hours UTC (isolated, but

a busy^ graph today).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

April - 2019

~ 17th: Cleaned bad connections --after losing the daily

total count.

~ 20th: 33cpm peak at 03:01 hours (UTC) (isolated).

~ 25-26th: Forgot and let the count run for 2 days.

Nothing lost (except daily resolution).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

May - 2019

~ 3rd: 33cpm peak at (about) 09:00 hours (all

UTC) (isolated).

~ 3rd: 35cpm peak at 13:51 hours (isolated).

~ 4th: 33cpm peak at 10:05 hours (isolated).

~ 4th: 35cpm peak at 11:01 hours (isolated).

~ 6th: 33cpm peak at 11:31 hours (isolated).

~ 11th: 34cpm peak at 17:25 hours (isolated).

~ 14th: 36cpm peak at 11:56 hours (isolated).

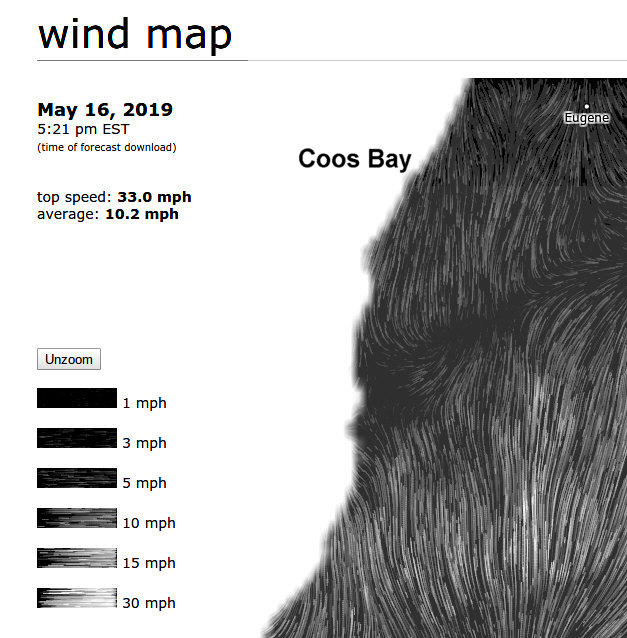

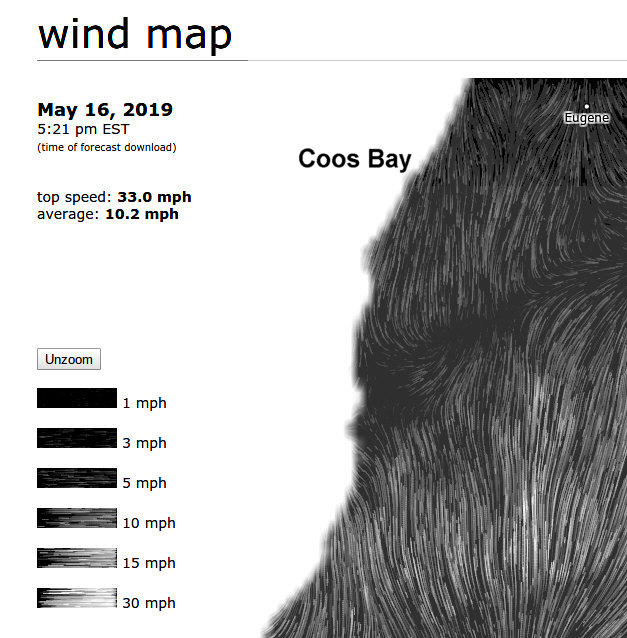

~ 13th through 16th: Looks like a mild build-up. It

started raining again, NASA Space Weather speaks of incoming coronal mass

ejections from the Sun, the wind is from the north, a smoke/particulate

station just 1.6 miles distant looks normal and about half of what it was

back in March. (I was waiting for such an increase

in order to compare the smoke graph and to standardize how to present it

here.)

Both displays are as of the 16th of May.

~ 16th: 33cpm peak at 11:30 hours UTC (isolated). (Saved

display screen due to mild build-up.)

~ 24th: 36cpm peak at 13:21 hours (isolated).

~ 26th: 33cpm peak at 05:31 hours (isolated).

~ 30th: 33cpm peak at 16:54 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

June - 2019

~ 6th: 35cpm peak at 15:08 hours UTC (isolated).

~ 7th: 33cpm peak at 10:57 hours (isolated).

~ 11th: 33cpm peak at 21:11 hours (isolated).

~ 24th: The Sun has been very quiet --no spots.

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

July - 2019

~ 7th: 34cpm peak at 05:22 hours UTC (isolated).

~ 8th: 35cpm peak at 01:59 hours (isolated).

~ 20th: 33cpm peak at 12:20 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

August - 2019

~ 3rd: 33cpm peak at 04:03 hours UTC (isolated).

~ 12th: 34cpm peak at 03:42 hours (isolated).

~ 19th: 33cpm peak at 07:08 hours (isolated).

~ 27th: 35cpm peak at 14:17 hours (isolated).

~ 31st: 33cpm peak at 12:24 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

September - 2019

~ Due to distractions, I several times let the

counter run on for 2 or 3 days. No data or peaks were lost.

~ 15th: 33cpm peak at 18:44 hours UTC (isolated).

~ 16th: 37cpm peak at 07:03 hours (isolated).

~ 18th: 34cpm peak at 10:13 hours (isolated).

~ 21st: 33cpm peak at 21:27 hours (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

October - 2019

~ There are runs of more than one day, but no data

or peaks were lost. Fortunately, the counts have been calm.

~ 8th: 35cpm peak at 06:55 hours UTC (isolated).

~ 9th: 33cpm peak at 06:27 hours (isolated).

~ 11th: 33cpm peak at 17:19 hours (isolated).

~ 23rd: 34cpm peak at 00:01 hours (isolated).

~ 11/01: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

November - 2019

~ 7th: 34cpm peak at 01:38 hours UTC (isolated).

~ 9th: 34cpm peak at 17:23 hours (isolated).

~ 13th: Power failure + backup battery failure

= no data today.

~ 18th: 34cpm peak at 00:37 hours (isolated).

~ 27th: I'm guessing this is about the California

wild fires. I tried to access a nearby smoke

monitoring station but data for these past two days has been lost.

(They seem to lose a lot of data.) There were no threshold passing peaks.

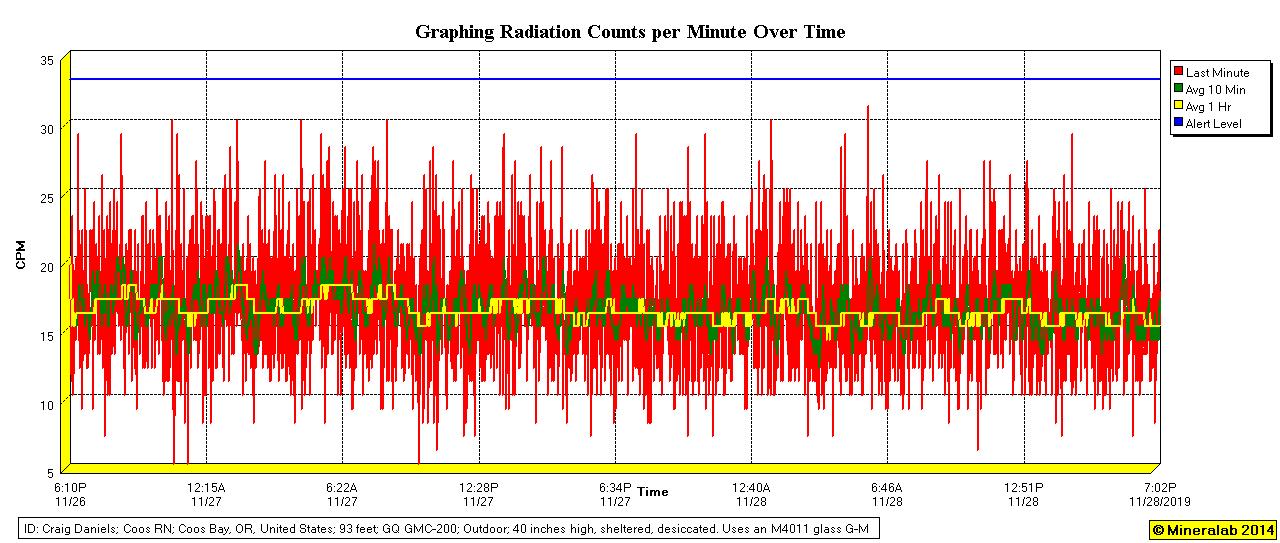

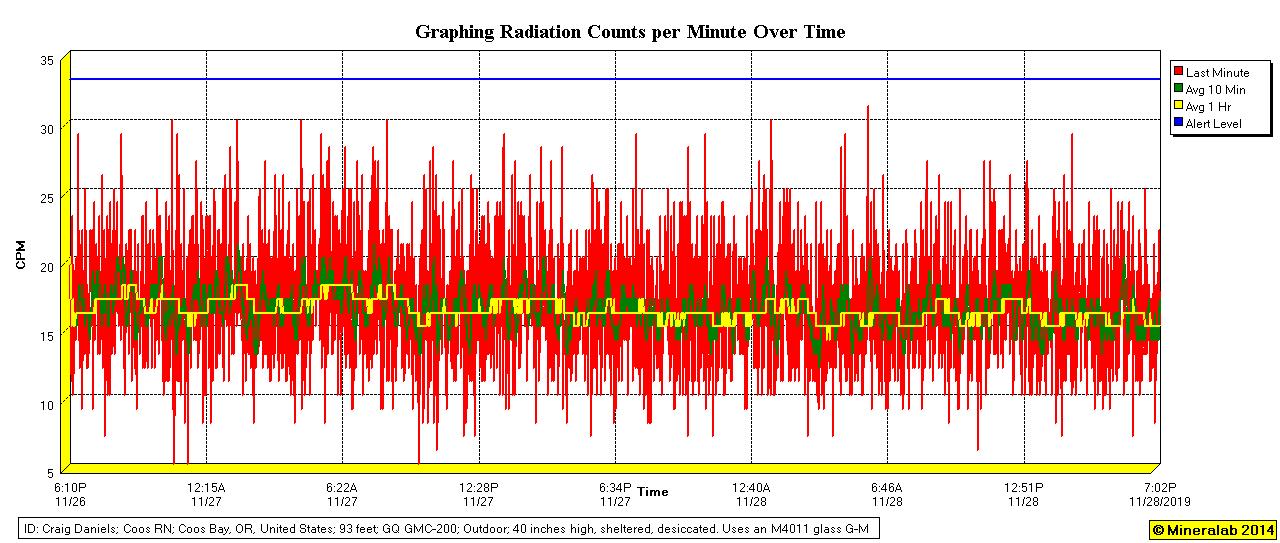

Here's the daily Geigergraph record:

Minute by minute Geigergraph for 11/27-28 (click to

enlarge)

~ 29th: 33cpm peak at 05:15 hours (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

December - 2019

~ 10th: Unusually high 39cpm peak at 00:37 hours UTC

(quite isolated).

~ 13th: 34cpm peak at 16:11 hours (isolated).

~ 26th: 33cpm peak at 20:54 hours (isolated).

~ 29th: 33cpm peak at 22:43 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

January - 2020

~ 2nd: 33cpm peak at 02:26 hours UTC (isolated).

~ 21st: 34cpm peak at 06:37 hours (isolated).

~ 23rd: 33cpm peak at 17:55 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

February - 2020

~ 28th: 33cpm peak at 02:43 hours UTC (isolated).

~ 29th: 35cpm peak at 10:40 hours (isolated).

~ 29th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

March - 2020

~ 29th: 33cpm peak at 22:55 hours UTC (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

April - 2020

~ 3rd: 33cpm peak at 04:20 hours UTC (isolated).

~ 3rd: See the air sample

decay graph.

~ 9th: 34cpm peak at 16:17 hours (isolated).

~ 16th: 33cpm peak at 12:47 hours (isolated).

~ 17th: 33cpm peak at 00:44 hours (isolated).

~ 18th: Due to statistical count variations and/or actual count variations, both in the running

base line and the draw filter counts (note the 10 minute average trace below),

there's no recognizable decay curve at this low level.

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

The 4/18 air draw P1-P4 counts spanned the last hour

of this (outside station) graph (click to enlarge)
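
The "statistical count variations" mentioned for the 18th can be put into rough numbers. The sketch below treats each minute's count as pure Poisson noise, which is an assumption, and uses a mean of about 15.6 cpm, a figure inferred from the November 2020 note that 20cpm was 128% of normal. It then estimates how often a single minute would reach the 33cpm threshold by chance, and how much scatter to expect in 1 minute and 10 minute averages.

```python
import math


def poisson_tail(k_min: int, mean: float) -> float:
    """P(X >= k_min) for a Poisson-distributed count with the given mean."""
    below = sum(math.exp(-mean) * mean ** k / math.factorial(k)
                for k in range(k_min))
    return 1.0 - below


ASSUMED_MEAN_CPM = 15.6  # assumption: inferred from "20cpm (128% of normal)"
THRESHOLD_CPM = 33

# Chance that any one minute reaches the threshold through noise alone.
p_minute = poisson_tail(THRESHOLD_CPM, ASSUMED_MEAN_CPM)

# Expected number of such minutes in a 30 day month of continuous counting.
expected_per_30_days = p_minute * 60 * 24 * 30

# One-sigma scatter of a single minute's count and of a 10 minute average.
sigma_1min = math.sqrt(ASSUMED_MEAN_CPM)
sigma_10min_avg = math.sqrt(ASSUMED_MEAN_CPM / 10)

if __name__ == "__main__":
    print(f"P(minute >= {THRESHOLD_CPM}) = {p_minute:.2e}")
    print(f"expected threshold minutes per 30 days = {expected_per_30_days:.1f}")
    print(f"sigma: 1 min = {sigma_1min:.2f} cpm, 10 min avg = {sigma_10min_avg:.2f} cpm")
```

Under these assumptions, chance alone would produce a few threshold-level minutes per month. That says nothing about whether any particular peak was real. It only suggests why a weak filter reading can disappear into the scatter of the 10 minute trace.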

May - 2020

~ 15th: 33cpm peak at 10:46 hours (isolated).

~ 26th: 34cpm peak at 03:15 hours (isolated).

~ 29th: 36cpm peak at 04:33 hours (isolated).

~ 30th: 33cpm peak at 03:28 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this evening (PST/PDT).

June - 2020

~ 19th: 34cpm peak at 14:21 hours (isolated).

~ 30th: 34cpm peak at 11:52 hours (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

July - 2020

~ 3rd: 33cpm peak at 10:53 hours (all UTC) (isolated).

~ 10th: 33cpm peak at 01:04 hours (isolated).

~ 11th: 34cpm peak at 03:58 hours (isolated).

~ 16th: 35cpm peak at 13:25 hours (isolated).

~ 17th: 37cpm peak at 10:09 hours (isolated --no

build-up).

~ 28th: 33cpm peak at 10:04 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this evening (PST/PDT).

August - 2020

~ 4th: 35cpm peak at 15:14 hours (all UTC) (isolated).

~ 16th: Saved a normal looking (if somewhat high

day's average) screen capture.

~ 22nd: 33cpm peak at 08:41 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

September - 2020

~ 1st: 35cpm peak at 12:15 hours (all UTC) (isolated).

~ 8th: Smoke from Oregon's forest fires blotted out the Sun at local noon, seemingly replaced by fog (and smoke)

on the morning of the 12th (as I type this note). Although an air draw filter (at 00:30 hours on the 10th - UTC)

showed a significant count (with an unusual decay curve/step), my daily 24 hour averages were barely affected

(implying mutations and beta in the filter --see the blue P2 & P5 dots). I washed the exterior of the outside

station's housing on the morning of the 11th, but the subsequent count downturn doesn't seem statistically significant.

~ 10th: 33cpm peak at 06:11 hours (isolated).

~ 11th: 33cpm peak at 16:17 hours (isolated).

~ 13th: This morning's count was running at 108%, so I did another air draw, getting a high (but not record) count

from the filter and a decay curve more typical of radon daughters. (No rain here for a long time, and little or no

sunshine on the sand and soil --so it has to be coming in with the smoke --sourced 100 miles away, where the

radiation must be pretty high.) The day's peak minute count was only 30. It's my guess that our intense Oregon

fires are liberating radon from forest floors.

~ 17th: 33cpm peak at 09:32 hours (isolated).

~ 19th: 35cpm peak at 07:53 hours (isolated).

Smoke has cleared with rain.

~ 28th: 34cpm peak at 09:28 hours (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).
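
The September air draws turn on whether a filter's decay curve looks "typical of radon daughters", which have half-lives of a few tens of minutes. One hedged way to put a number on a decay curve is to take two background-subtracted filter readings and compute the apparent half-life between them. The function name and the two-reading approach are assumptions for illustration, not the P1-P5 procedure used on these graphs. A mix of several daughters will not follow a single clean exponential, so treat the result as a rough indicator only.

```python
import math


def apparent_half_life(net_early_cpm: float, net_late_cpm: float,
                       elapsed_min: float) -> float:
    """Apparent half-life in minutes from two background-subtracted rates.

    Assumes simple exponential decay between the two readings:
        net_late = net_early * 0.5 ** (elapsed / half_life)
    """
    if net_early_cpm <= 0 or net_late_cpm <= 0:
        raise ValueError("net rates must be positive (check the background value)")
    if net_late_cpm >= net_early_cpm:
        raise ValueError("late reading is not lower than the early one; no decay seen")
    return elapsed_min * math.log(2) / math.log(net_early_cpm / net_late_cpm)


if __name__ == "__main__":
    # Round numbers for illustration only, not measured values:
    # a net rate that halves in 30 minutes gives a 30 minute half-life.
    print(apparent_half_life(80.0, 40.0, 30.0))
```

When the filter's net count is only a few cpm above the running base line, the ratio inside the logarithm is dominated by noise and the function will often refuse to give an answer. That fits the low-level cases in this log where no decay curve could be recognized.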

October - 2020

~ 2nd: 33cpm peak at 14:53 hours (isolated)

(all UTC).

~ 16th: Apologies! --I made a 16th day update

graph but didn't post it this month. (Getting old.)

~ 27th: 34cpm peak at 14:40 hours (isolated).

~ 31st: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).

November - 2020

~ November 2nd: I did a "base

line" beach wander today at 22:15 hours UTC. These wanders/walks will only

be on Bastendorff Beach (from here on), will follow a recent high tide

line and prominent secondary froth lines, in order to audibly detect any

discrete, gamma emitting hot particles such as "fuel fleas". (I've yet

to find any.) These will be 10 minute walks (per the Geiger counter's internal

timer) at about 30 inches above the sand. The count was 96% of normal today.

~ November 2nd: It became clear,

when looking at my 11/2 to 11/3 graph, that there was a minor event centered

on 18:00 hours UTC (late morning -PST- of 11/2 here, displayed as "6pm"

UTC on this graph). The 10 minute and 60 minute averages reached 20cpm

(128% of normal, that small circle on the November graph), then took about

90 minutes to settle down (and in plenty of time for my "beach wander").

Never the less/more, the 11/2-11/3 24 hour average ended up at only 103.2%.

(The previous 24 hours averaged to 104.9%.) (I might have missed previous

such events/waves. I have no idea of its significance, or even of what

I'm counting.)

~ 3rd: 34cpm peak at 22:24 hours (isolated)

(all UTC).

~ 9th: 33cpm peak at 14:59 hours (isolated).

~ 21st: 34cpm peak at 19:47 hours (isolated).

~ 23rd: 37cpm peak at 01:19 hours (isolated).

~ 29th: 34cpm peak at 18:34 hours (isolated).

~ 30th: No other threshold (33cpm) crossing peaks

as of this morning (PST/PDT).
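
The November 2nd note compares 10 minute, 60 minute and 24 hour averages, each as a percent of normal. A minimal sketch of that arithmetic follows. The helper names are hypothetical, and the "normal" of about 15.6 cpm is an assumption inferred from "20cpm (128% of normal)". The example day is a flat placeholder with a 90 minute bump, not the recorded data, and it is not meant to reproduce the 103.2% figure.

```python
from typing import List

ASSUMED_NORMAL_CPM = 15.6  # assumption: 20 cpm / 1.28


def trailing_mean(cpm: List[float], window: int) -> List[float]:
    """Trailing average over `window` minutes (shorter at the very start)."""
    out = []
    running = 0.0
    for i, count in enumerate(cpm):
        running += count
        if i >= window:
            running -= cpm[i - window]  # drop the minute that left the window
        out.append(running / min(i + 1, window))
    return out


def percent_of_normal(mean_cpm: float,
                      normal_cpm: float = ASSUMED_NORMAL_CPM) -> float:
    """Express an average count rate as a percentage of the assumed normal."""
    return 100.0 * mean_cpm / normal_cpm


# Placeholder day: flat "normal" with a 90 minute bump to 20 cpm
# centered on 18:00 UTC. Illustrative only.
day = [ASSUMED_NORMAL_CPM] * 1440
for minute in range(1035, 1125):
    day[minute] = 20.0

ten_min = trailing_mean(day, 10)
peak_percent = percent_of_normal(max(ten_min))
day_percent = percent_of_normal(sum(day) / len(day))

if __name__ == "__main__":
    print(f"10 minute average peaks at {peak_percent:.0f}% of normal")
    print(f"24 hour average is {day_percent:.1f}% of normal")
```

The placeholder shows why such an event stands out on the short averages yet barely moves the daily figure: 90 minutes is only a sixteenth of a day, so the bump adds under 2% to the 24 hour average.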

(See also: current/recent monitoring)

Notes and Comments

* January 31st, 2020: Japan has

decided to slowly drain the vast storage of radioactively contaminated

water --into the ocean. If the "linear" theory of radiation induced casualties

is correct, slow or fast release (over a wide range of rates) will make

little difference to the collective health of peoples who harvest sea food.

A kilogram of a radionuclide, diluted into a million kilograms of water

will damage/sicken people at rate "X", while the same kilogram, diluted

into 100 million kilograms of water --will damage 100 times as many people

at the rate of 1/100th X.

March 31st update: After thinking on it (the above

paragraph), the more concentrated release might damage fewer people, due

to "wasting" much of the contaminant as over-kill. It's just the news headlines

which would look worse.
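
The dilution argument above is just multiplication, and a few lines of Python make it explicit. Everything here is a toy model under stated assumptions: the number of people exposed is taken to scale up with the volume of water, the individual rate to scale down by the same factor, and the "over-kill" idea from the March 31st update is modelled by capping each person's chance of harm at 1. The population and rate values are hypothetical placeholders, not dose estimates.

```python
from typing import Tuple


def linear_harm(people: float, rate_per_person: float) -> float:
    """Expected casualties under a strictly linear model."""
    return people * rate_per_person


def saturating_harm(people: float, rate_per_person: float) -> float:
    """Same, but nobody can be harmed more than once (rate capped at 1)."""
    return people * min(rate_per_person, 1.0)


def dilute(people: float, rate_per_person: float,
           factor: float) -> Tuple[float, float]:
    """Assumed effect of dilution: more people exposed, each at a lower rate."""
    return people * factor, rate_per_person / factor


if __name__ == "__main__":
    # Hypothetical placeholders: 1000 people at rate X = 0.02.
    concentrated = (1000.0, 0.02)
    diluted = dilute(*concentrated, factor=100.0)
    print(linear_harm(*concentrated), linear_harm(*diluted))

    # "Over-kill" case: a dose five times what it takes to harm someone.
    hot = (10.0, 5.0)
    print(saturating_harm(*hot), saturating_harm(*dilute(*hot, factor=100.0)))
```

In the strictly linear case both releases give the same total, which is the January 31st point. With the cap in place, the concentrated release harms fewer people than the diluted one, which is the March 31st second thought.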

* The May 18, 2017 edition of Space

Weather (use their archives retrieval utility, top right of page) carries

an article describing the recent discovery that very low frequency radio

communications to our nuclear submarine fleet also resonate the Earth's

magnetosphere, forming a belt around the Earth. That belt appears to be

offering protection to satellites (and space farers?) against "killer electrons"

--presumably born of primary cosmic rays. That outcome is an accidental

byproduct of (what I gather to be) near 24/7 radio transmissions since

the 1960s, so we might not have ever been able to study the Earth's normal

deployment of its magnetic field and radiation belts.

What I want to know: if high energy

electrons (beta radiation) can be "killers" of space electronics, then

tell me more about

muons --which carry the same

negative charge, move at relativistic speed, are over 200 times more massive

than electrons, might constitute half of our normal "background radiation"

near sea level, and much more at higher altitudes. How is it that the health-physics

people assign muons the same biological impact as gamma and X-rays? (Somehow,

they're alleged to shed/impart very little energy per foot of travel.)

* It looks like 1800 tons of "spent" fuel rods are

going to be sequestered indefinitely a little more than 100 feet back from

the high tide line as the San Onofre nuclear power plant in California

gets fully decommissioned. At least it will be out of the poorly protected

pools, but this stuff must be kept safe for thousands of years, since spent

fuel is a far worse radioactive hazard than fresh rods. If I read the news

correctly, the outer containers, made of 5/8" stainless steel (instead

of the European standard of 20 inches), will only be half buried --perhaps

due to the water table. (Stainless steel deteriorates in a watery environment,

which is why maritime hardware is made of bronze.) Be my guess that, with

the rising sea level, corrosion will make the containers and their contents